Breaking headline: scientists mislead society, and public misunderstanding follows. Seemingly every day, a groundbreaking report appears in newspapers, on social media, or in online forums: “Scientists have proven that all past studies are flawed. New product found that will cause you to lose weight/boost your IQ/increase testosterone levels/cause cancer/ensure worldwide destruction,” and so on. As a public composed of consumers, we eat each article up and promise ourselves we will abide by the way of life it presents. That is, until the next fad comes around.

The first step in identifying how these articles may be misleading you is to understand what the author knows and what they are implying. Most of the time, a study’s findings are based on a correlation: two variables appear closely associated, so that a change in one accompanies a similar change in the other. If an experiment has been conducted, however, the researchers may have been able to demonstrate causation: the manipulation of one variable, known as the independent variable, is followed by an effect on a second variable, the dependent variable. If only a correlation is identified, you do not know what is causing the variables to correlate; you only know that they do.
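To make "closely associated" concrete, here is a minimal sketch of how a correlation coefficient can be computed. It assumes the Pearson coefficient, which the sources above do not specify, and the two lists of numbers are invented purely for illustration.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length (at least 2)")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    # Co-movement of the two variables around their means.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / math.sqrt(var_x * var_y)


# Hypothetical numbers, invented for illustration only.
hours_of_sunshine = [2, 4, 5, 7, 9]
ice_cream_sales = [20, 41, 48, 72, 88]

r = pearson_r(hours_of_sunshine, ice_cream_sales)
print(f"r = {r:.3f}")
```

A value near 1 or -1 means the two lists move together closely; it says nothing about which one, if either, drives the other.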

As Evelyn Lamb (2013) writes, articles in which a correlation is identified and presented in a form that suggests causality are probably just “commissioned to meet publication quotas” (p. 3). Because the printed word is now taken to be the most trustworthy source, readers will often wholeheartedly believe each news article they read, forgetting that the press is in fact a business. Its number one priority is selling the most papers or getting the most website visits. If implying causation makes a report sound more interesting, those in publishing may not hesitate to mislead.

These misconceptions are only advanced further by the fact that when two variables correlate with one another, we often assume that one is causing the other. Every day, those who understand this common fallacy cash in on the public’s ignorance. Marketers will convince you that you must purchase some new gadget to ensure a desired outcome when, in reality, their claims are based only on correlation. A politician may attempt to assure you that their opponent is not suitable for the position because a seemingly bad outcome correlates with one of that opponent’s initiatives (Pease & Bull, n.d.). Do not be misled by each ambiguous pairing you see. To become an informed individual, it is key to understand that correlation does not imply causation.

Scientists understand that correlation can serve a purpose in a plethora of different fields so long as it is presented as such. Correlations are often much easier and cheaper to find than running an entire study. And when it is useful to run an experiment, it helps to know that an association even exists between your variables before starting. Many different disciplines take advantage of the convenience that correlation provides. Insurance companies are able to set their rates based on data about associations between customer characteristics and accidents without having to run an experiment that may end in disastrous collisions. The government is able to associate certain attributes such as education with incomes, careers, and even likelihood of bribery and corruption (Pease & Bull, n.d.). Correlations can be used to analyze individual stocks or the overall market (PreMBA, n.d.). Provided that they are not presented as causation, correlations can be useful.

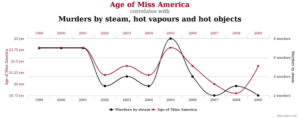

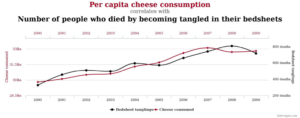

Even if correlations are presented as nothing more than an association, they could have occurred randomly or a confounding variable may be present. As can be seen in the following charts created by Tyler Vigen (2015) for his book “Spurious Correlations”, two variables may have a correlation coefficient of 99.26% and still have little to nothing to do with one another.

Correlations reported for the charts: 87.01%, 94.71%, and 99.26%.
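How easily can a near-perfect match arise by chance? The sketch below is a toy simulation, not a reconstruction of Vigen's charts: it generates 200 unrelated random series of 10 points each (both numbers are arbitrary assumptions) and reports the strongest correlation found between any pair.

```python
import itertools
import math
import random


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


def random_walk(rng, length):
    """A series that drifts up and down at random, like many yearly statistics."""
    value, walk = 0.0, []
    for _ in range(length):
        value += rng.gauss(0, 1)
        walk.append(value)
    return walk


rng = random.Random(0)  # fixed seed so the run is repeatable
series = [random_walk(rng, 10) for _ in range(200)]  # 200 unrelated "data sets"

# Compare every pair of series and keep the strongest correlation found.
best = max(abs(pearson_r(a, b)) for a, b in itertools.combinations(series, 2))
print(f"strongest |r| among {len(series)} unrelated series: {best:.4f}")
```

With nearly twenty thousand pairs to choose from, some pair will line up almost perfectly even though every series was generated independently. Searching a large pool of data sets for the best match works the same way.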

With all of the data sets that exist, there is always the chance that two variables will match each other nearly perfectly without even a slight connection (Vigen, 2015). Another possibility is that a confounding variable exists: a factor that affects both your independent and dependent variables. An infamous example of a confound creating confusion arose when scientists found that the consumption of ice cream and the number of reported cases of polio were significantly correlated. They then erroneously assumed that eating this dessert could cause the disease. Unfortunately, they did not realize that a confounding variable was present: summertime. During the summer, children are much more likely to cool down with some ice cream. They also spend much more time playing together, so their chances of spreading the disease are much higher (Sandler, 1948). When causation is assumed without experimentation, absurd conclusions can be reached.
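The ice cream example can be imitated with a small simulation. Every number below is invented: a hypothetical daily temperature drives both ice cream consumption and case counts, while the two outcomes never influence each other. The sketch then removes the part of each outcome explained by temperature (a simple least-squares adjustment, chosen here as one of several possible methods) and recomputes the correlation.

```python
import math
import random


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


def residuals(ys, xs):
    """What is left of ys after subtracting a least-squares line fitted on xs."""
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    slope = (sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
             / sum((x - mean_x) ** 2 for x in xs))
    return [(y - mean_y) - slope * (x - mean_x) for x, y in zip(xs, ys)]


rng = random.Random(1)  # fixed seed so the run is repeatable

# Hypothetical model: temperature (the confound) drives both outcomes.
temperature = [rng.uniform(0, 35) for _ in range(500)]
ice_cream = [2.0 * t + rng.gauss(0, 10) for t in temperature]
polio_cases = [0.5 * t + rng.gauss(0, 3) for t in temperature]

raw_r = pearson_r(ice_cream, polio_cases)
adjusted_r = pearson_r(residuals(ice_cream, temperature),
                       residuals(polio_cases, temperature))

print(f"ice cream vs. polio, raw:                      r = {raw_r:.2f}")
print(f"ice cream vs. polio, adjusted for temperature: r = {adjusted_r:.2f}")
```

In this toy model the raw correlation is strong, yet it all but disappears once temperature is accounted for. The adjustment only works because the confound was measured; an unmeasured confound would leave the misleading correlation intact.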

Scientists now understand that an experiment is necessary to assign causation. Three conditions that must hold in your experiment in order to assign causation are outlined in an article by Travis Hirschi and Hanan Selvin (1966) entitled “False Criteria of Causality in Delinquency Research”. First, you must show that a correlation exists. Second, you must ensure that the change in the independent variable always precedes the effect on the dependent variable. Finally, the relationship must still be apparent after the removal of all possible confounding variables. Across all scientific fields, experimentation is known as the best and only way to truly prove causation.

Journalists love to imply causation in the hopes of affecting your dieting regimen. Every time I log onto Facebook, I am barraged with a dozen techniques: “Eat this newly discovered superfruit and cut down your waistline by at least two inches in just two weeks!” or “Stop eating this type of carb and decrease your chances of heart failure!” The tips are innumerable. But is each of these claims truly sound? Most likely not. As Craig Pease and James Bull (n.d.) point out, we eat a LOT. Our diets have become increasingly varied, and each item we eat is made up of many different components. It is nearly impossible to remove all confounding variables, as there are simply too many. Meat has historically been believed to be bad for your health. While this is often attributed to its fat content, the explanation can still be debated (Pease & Bull, n.d.). Could it be the methods by which the animals are raised? The way in which the meat is prepared? The heaping pile of mashed potatoes you chow down on to accompany your bloody steak? Until we can isolate a single variable, it will be impossible to find out just what is clogging up your arteries.

On the other end of the spectrum, dietary fiber has been seen to correlate with a decreased chance of a heart attack. However, it is just as hard to identify what is healthy as it is to discover the unhealthy. Is the big bowl of Cheerios that you eat every morning the sole factor in saving your heart (Pease & Bull, n.d.)? Or is it that the type of person who chooses their breakfast based on fiber content continues to make similarly healthy choices throughout the day? While choices such as this will probably not hurt you, they are not your get-out-of-jail-free card. Eating fiber will not necessarily give you a working heart. You must live a healthier life overall.

In 2013, the tabloid Gawker published an article entitled “More Buck For Your Bang”. It reported that “people who have sex more than four times a week receive a 3.2 percent higher paycheck than those who have sex only once a week” (Rivlin-Nadler, 2013). The science supporting this is that if you include sexual activity, along with many other variables related to health and age, in a model that predicts a person’s income, you end up with a “slightly more accurate” (Drydakis, 2013) model. Evelyn Lamb (2013) appears to follow Gawker’s lead with her article title that starts “Sex Makes You Rich?” In fact, she is ironically mimicking her competitor’s articles, which give the disclaimer that they are simply “circulated to encourage discussion” (Drydakis, 2013). Lamb’s primary goals in her paper are to refute the statements made in Gawker’s article and to teach her readers how to identify similar headlines that attempt to trick consumers into assuming causation. Most readers will see the Gawker article and believe that if only they had more sex, their pockets would be filled to the brim with bills. Clearly, the claims made by Gawker are misleading. As a member of the public, it is important that you identify which articles are informing you and which are trying to entice you to buy by any means necessary.

Lamb (2013) calls the article outrageous. Even assuming that the study could prove causation, Gawker probably switched the independent and dependent variables at the very least. It is much more likely that having more money allows you to have more sex than the reverse. A person with more money could be considered attractive because of that money. They also likely have more time for sexual activities if they aren’t working so hard just to house and feed themselves. Lamb also points out the high probability of confounding variables. The study’s authors included numerous factors in their regression model, yet only the one that would get the most attention was highlighted: sex. Factors such as health and age likely have a far greater impact on income. Articles similar to Gawker’s are unfortunately too common. For each article you read, carefully analyze any motivations the author may have and how these may affect the work they are presenting.

Problems stemming from an assumption of causality can also be seen in much graver circumstances. Travis Hirschi and Hanan Selvin published an article in 1966 that discusses issues of false causality in research attempting to find the cause of delinquency. In the article, Hirschi and Selvin list the factors most often found to correlate with delinquency: “broken homes, poverty, poor housing, lack of recreational facilities, poor physical health, race, [and] working mothers” (p. 255). However, the 1960 Report to the Congress stated that these factors are not causes. Yet the Report’s argument for non-causality was somewhat flawed: it held that a factor must be found in every case of delinquency if it is a cause. Failing that test does not prove that a factor is not a cause, only that it cannot be the sole cause. In their counter-argument, Hirschi and Selvin argue that this is a false criterion, as a confounding variable is the only proof of non-causality.

Either way, society is affected by which case the government chooses to believe. If it chooses to follow the Report, the government may not address the variables identified by Hirschi and Selvin at all and may not set up measures against these factors that could limit the cases of delinquency. On the other hand, if the government takes Hirschi and Selvin’s side, it may stop research and preventative measures against other possible factors. Additionally, the public may begin to panic about how these factors affect their own lives, which could lead to stereotyping. People may begin to avoid the impoverished, those of other races, or the physically impaired because they now believe those individuals have a higher probability of delinquency. Unfortunately, in cases where there are many contributing factors, establishing causality is a much more complicated process, and any incorrect assumptions may have critical consequences.

To avoid falling prey to false causality in the future, the consumers of scientific findings must work together with the researchers. Scientists must try to ensure that every study they publish follows protocols that safeguard the veracity of their claims. Such instructions can be found in an article by MacArthur and colleagues entitled “Guidelines for investigating causality of sequence variants in human disease”. Each idea they establish can be interpreted more broadly to apply to all cases of causality. Their first and most important stipulation is that you must conduct an experiment to conclusively assign causality. You can also use all publicly available data, but ensure that the information is accurate. During experimentation, find the probability that your data could have been observed by chance alone (the risk of making a Type I error). Next, determine the statistical probability of causality for each variable. Finally, when you publish your findings, ensure that you reveal all information whether it supports or contradicts your point, report the strength of your evidence, and, if you only identify a correlation, ensure that a reader will not assume you found causality (MacArthur et al., 2014, p. 471). If all scientists, including those who work for tabloids, follow these steps, then causality is much less likely to be falsely assumed.
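One common way to estimate how often a correlation this strong would appear by chance is a permutation test. MacArthur and colleagues do not prescribe this particular method; it is used here only as an illustrative sketch, and the measurements are invented. The idea is to shuffle one variable many times, breaking any real link, and count how often the shuffled data correlates at least as strongly as the real data.

```python
import math
import random


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


def permutation_p_value(xs, ys, trials=2000, seed=0):
    """Share of random shufflings whose |r| matches or beats the observed |r|."""
    rng = random.Random(seed)
    observed = abs(pearson_r(xs, ys))
    shuffled = list(ys)
    hits = 0
    for _ in range(trials):
        rng.shuffle(shuffled)  # break any real pairing between xs and ys
        if abs(pearson_r(xs, shuffled)) >= observed:
            hits += 1
    # Add one to both counts so the estimate is never exactly zero.
    return (hits + 1) / (trials + 1)


# Hypothetical measurements, invented for illustration only.
dose = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
response = [1.1, 2.9, 3.2, 4.8, 5.1, 7.2, 6.9, 8.3, 9.1, 10.4]

p_value = permutation_p_value(dose, response)
print(f"estimated chance of a correlation this strong arising randomly: {p_value:.4f}")
```

A small value suggests the association is unlikely to be a fluke. It does not show which variable causes which, and it does not rule out a confound.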

Even if scientists do agree to this, skepticism remains key for you as a member of the public. While it may be useful to know when two variables are correlated, ensure that a study proves causality before changing your lifestyle. If you are looking for causation, make sure that the data was obtained through experimentation and that no confounding variables were forgotten or purposefully overlooked. On that note, attempt to understand any biases the experimenters or reporters may have and factor these into your assumptions. Above all else, while the phrase may be trite, it is important to remember: correlation is not causation.

References

Drydakis, N. (2013). The effect of sexual activity on wages. International Journal of Manpower, 192-215.

Hirschi, T., & Selvin, H. (1966). False Criteria of Causality in Delinquency Research. Social Problems, 13(3), 254-268.

Lamb, E. (2013, August 20). Sex Makes You Rich? Why We Keep Saying. Retrieved September 24, 2015.

MacArthur, D., Manolio, T., Dimmock, D., Rehm, H., Shendure, J., Abecasis, G., . . . Gunter, C. (2014). Guidelines for investigating causality of sequence variants in human disease. Nature, 508, 469-476.

Pease, C., & Bull, J. (n.d.). Correlations are hard to interpret. Retrieved September 29, 2015.

PreMBA Analytical Methods. (n.d.). Retrieved September 30, 2015.

Report to the Congress, Doc., at 21 (1960).

Rivlin-Nadler, M. (2013, August 17). More Buck For Your Bang: People Who Have More Sex Make The Most Money. Retrieved October 27, 2015.

Sandler, B. (1948, August 5). Diet Prevents Polio. The Asheville Citizen.

Vigen, T. (2015, May 12). 15 Insane Things That Correlate With Each Other. Retrieved September 29, 2015.