Background

Publication bias, often called the file-drawer problem, stems from scientists’ tendency to ‘throw away’, or simply not publish, studies that either disprove their hypothesis or seem “irrelevant”. Neither the general reader nor other scientists are aware of these lost studies. Scientists are at a disadvantage because they do not know how many studies had to be run before the hypothesis was supported, and readers are at a disadvantage because many factors that they never hear about may have affected the result. Audiences see only a small part of the entire experimental procedure. Although omitting information differs from lying overtly, it still paints a picture of the experiment and its result that is not entirely truthful. The file-drawer problem can be the start of a slippery slope toward research fraud because it, in effect, shapes results to serve a personal agenda. People accept that products are “scientifically proven” and give weight to that label; publication bias suggests that it may not mean as much as they think. Retractions, which would tell prescribers and researchers that the evidence behind certain products no longer holds, are not frequently published. A drug company researcher analyzed 53 papers in top journals and found that he couldn’t replicate 47 of them. This suggests that published papers omit too much. Because the public cannot see information that was never published, the problem stays hidden from them, and that invisibility is the crux of publication bias.

Publication bias can be compared to an iceberg: the published studies are only the visible tip of the research, and a large amount of data beneath the surface is never seen.

Complication

The scientific community sees publication bias as “the systematic error induced in a statistical inference by conditioning on the achievement of publication status. Clearly, such a bias can only be present if the inference drawn in a study influences the publication decision, then no study can produce a biased inference, when viewed from the perspective of an audience or journal” (419, Begg & Berlin). This definition places much of the blame on publishers, since without publishing pressure there would seem to be little motivation to omit negative results. Journals are indeed more likely to publish positive results: “Journals are more likely to publish studies with conclusive and statistical significance. Articles drawing a positive conclusion regarding the association of interest are likely to be more interest to readers than articles which refute associations” (424, Begg & Berlin).

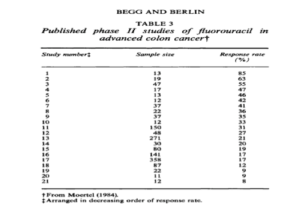

Unfortunately, it is relatively easy to manipulate studies to favor positive results. One tactic used by researchers and companies is to sample a small group of people rather than a large one (Figure 1). As Figure 1 shows, studies that test fewer people tend to report higher response rates. A study can therefore claim that 85% of people reacted positively to a drug when in reality only 13 people were tested.
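The effect of sample size can be illustrated with a small simulation. The sketch below is only an illustration, not a reconstruction of Figure 1: it assumes a hypothetical drug whose true response rate is 60% and counts how often a simulated trial reports a response rate of 80% or more. The function names, the 60% and 80% figures, and the group sizes are all assumptions chosen for the example.

```python
import random

def observed_response_rate(true_rate, n, rng):
    """Fraction of n simulated participants who respond to the drug."""
    responders = sum(1 for _ in range(n) if rng.random() < true_rate)
    return responders / n

def share_of_trials_at_or_above(threshold, true_rate, n, trials=5000, seed=0):
    """Share of simulated trials whose observed rate reaches the threshold."""
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    hits = sum(
        1
        for _ in range(trials)
        if observed_response_rate(true_rate, n, rng) >= threshold
    )
    return hits / trials

# Hypothetical drug: the true response rate is assumed to be 60%.
small = share_of_trials_at_or_above(0.80, true_rate=0.60, n=13)
large = share_of_trials_at_or_above(0.80, true_rate=0.60, n=500)

print(f"13-person trials reporting >= 80%:  {small:.1%}")
print(f"500-person trials reporting >= 80%: {large:.1%}")
```

Under these assumptions, a noticeable share of the 13-person trials report an inflated rate by chance alone, while the 500-person trials almost never do. If only the flattering small trials are published, the published record overstates the drug's effect.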

The complications of this issue are multifaceted. Publication bias can, at times, be an unspoken evil in the scientific realm. Many of the studies whose results are omitted from reports are funded by companies that need certain results to thrive economically: “Withholding of clinical trial data that would be financially harmful to a company seems to be commonplace. It has added to publication bias in certain fields of clinical medicine” (67, Wagner). Researchers who sign on to such studies are therefore either sworn to secrecy or on board with publishing incomplete work. A root cause of publication bias, unfortunately, is money, and this leads a large portion of the scientific community to turn a blind eye to it. Those in the scientific community who are not directly involved seek to eradicate publication bias and practice ‘healthy’ science.

Secondly, publication bias can be dangerous. Drugs that in reality harmed a select group of people can be presented as if they carry no risk: “unpublished studies raise questions of concerns regarding both underreported risks and underreported limitations in efficacy” (Ghamei). It is also detrimental to science in general. If experiments are not published, other scientists are unaware of results that have already been obtained or experiments that have already been done. A negative study for one scientist could be the missing link for another.

Thirdly, publication bias spoils data for others, which creates a particular problem in the world of meta-analysis. Meta-analysis, a statistical technique for combining the findings from independent studies, is the vehicle through which the public can receive a synthesis of a wide range of data. However, if most of that data, or even some of it, was presented as if there were no negative results, the overall conclusion can be erroneous.
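A short simulation can make this concrete. The sketch below assumes a hypothetical treatment with no real effect, simulates 400 small studies of it, and pools them with a simple fixed-effect (inverse-variance weighted) average, which is one common way to combine studies. It then repeats the pooling using only the studies that found a statistically significant positive result, as a stand-in for what would be published. The study sizes, the 1.96 cutoff, and the function names are assumptions made for the example, not details from the sources cited here.

```python
import random
import statistics

def simulate_study(true_effect, n, rng):
    """Return (estimated effect, standard error) for one simulated study."""
    samples = [rng.gauss(true_effect, 1.0) for _ in range(n)]
    estimate = statistics.fmean(samples)
    std_error = statistics.stdev(samples) / n ** 0.5
    return estimate, std_error

def pooled_effect(studies):
    """Fixed-effect pooled estimate: studies weighted by 1 / standard_error**2."""
    weights = [1.0 / se ** 2 for _, se in studies]
    weighted_sum = sum(w * est for w, (est, _) in zip(weights, studies))
    return weighted_sum / sum(weights)

rng = random.Random(42)  # fixed seed so the sketch is repeatable

# Assumption: the treatment truly has zero effect.
all_studies = [simulate_study(0.0, 30, rng) for _ in range(400)]

# "File drawer": only significant positive results (z > 1.96) get published.
published = [(est, se) for est, se in all_studies if est / se > 1.96]

pooled_all = pooled_effect(all_studies)
pooled_published = pooled_effect(published)

print(f"studies run: {len(all_studies)}, published: {len(published)}")
print(f"pooled effect, all studies:       {pooled_all:+.3f}")
print(f"pooled effect, published studies: {pooled_published:+.3f}")
```

Pooling every study recovers an effect close to zero, while pooling only the published subset suggests a clear positive effect that does not exist. The meta-analysis is carried out correctly in both cases; the error comes entirely from which studies reach it.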

Lastly, to expand on the problem of scientists lacking access to parts of other researchers’ work, studies that did not publish their negative results are difficult to replicate. In a recent study of 100 prominent papers, only 39% could be replicated. This does not benefit science in the long run, because scientists cannot build on other studies if those studies are incomplete.

Examples

Betty Dong, a pharmacologist at the University of California, signed a contract with Flint Laboratories to conduct a clinical trial comparing the company’s thyroid drug against a generic competitor. The contract stated that “data obtained by the investigation while carrying out this study is also considered confidential and is not to be published or otherwise released without written consent from Flint Laboratories” (77, Wagner), and she agreed to it. Soon after, however, a new company called Knoll Pharmaceuticals took over the rights to the drug and threatened Dong with legal action if she did not remove her published findings. The company also claimed that her results were in error and discredited her experiments. She withdrew her paper from the journal, and that study can no longer be accessed. In this case the bias was introduced after the paper was published, but the idea is the same: a study, or part of one, was removed from the published record to favor a positive result. The example also shows the power of private companies in the scientific sphere.

Another example of publication bias arose when Attorney General Spitzer of New York charged a pharmaceutical company, GlaxoSmithKline (GSK), with concealing information about the safety and effectiveness of an antidepressant. GSK conducted at least 5 studies with children and adolescents as subjects, but it suppressed the negative studies, which showed that the drug increased the risk of suicide in that age bracket and was not effective. Once brought to trial, the company admitted that “It would be commercially unacceptable to include a statement that efficacy had not been demonstrated, as this would undermine the profile of paroxetine [the drug in question]” (67, Wagner).

Solutions/Suggestions

Although certain aspects of the file-drawer effect are not easily fixable, like the role of private companies in scientific trials, there are ways to make it less of a problem. First, journals have been created that focus on these rejected studies and work to give a full picture of the experiment; the All Results Journal and the Journal of Negative Results in Biomedicine, for example, commit themselves to unearthing lost studies. Secondly, it would be beneficial to add regulations requiring scientists to publish all information that is deemed pertinent to a study. If companies and researchers knew the penalties for omitting parts of their experiments, fewer might choose to do so. Thirdly, if researchers did not think that producing negative studies put their reputations at risk, then perhaps they would not stand for the omission of those studies. One way to achieve this is to make the scientific community and funders more understanding of negative studies and their implications; education is the key. Lastly, the scientific community needs to educate the general public and advertising companies on the importance of examining studies in depth. If advertising companies refused to advertise a drug that is not backed up by numerous studies covering all demographics, there would be less financial incentive for companies to put out drugs that are not ready. Unfortunately, publication bias is reported to persist despite new legal efforts to deter it. However, the scientific community is optimistic that with time and education, publication bias will decrease.

References

Begg, C. and Berlin, J. Publication Bias: A problem of interpreting medical data. Journal of the Royal Statistical Society. Series A (Statistics in Society), Vol. 151, No. 3 (1988), pp. 419-463.

Dewey, R. Selective Reporting. http://www.intropsych.com/ch04_senses/selective_reporting.html

Ghamei, N. Publication Bias and the Pharmaceutical Industry: The Case of Lamotrigine in Bipolar Disorder. The Medscape Journal. 2008. http://www.ncbi.nlm.nih.gov/pmc/articles/PMC2580079/

Dolgin, E. Publication bias continues despite clinical-trial registration. Nature: international weekly journal of science. 9/11/09. http://www.nature.com/news/2009/090911/full/news.2009.902.html

Bohannon, John. Science Mag. AAS Publisher. 8/28/2015 http://www.sciencemag.org/content/349/6251/910.full.pdf?sid=71d25b2a-334e-404d-83e0-44803b6050fb

Edrup, N. Positive publication bias in cancer screening trials. Science Nordic. 10/10/13. http://sciencenordic.com/positive-publication-bias-cancer-screening-trials

Olsen, B. Journal of negative results in Biomedicine. http://www.jnrbm.com

Peplow, M. Social sciences suffer from severe publication bias. Nature. 8/28/2014. http://www.nature.com/news/social-sciences-suffer-from-severe-publication-bias-1.15787

Butler, P. Publication bias in clinical trials due to statistical significance or direction of trial results: RHL commentary (last revised: 1 July 2009). The WHO Reproductive Health Library; Geneva: World Health Organization.

All Results Journal. http://www.arjournals.com/ojs/