The first electronic calculator was introduced in 1963 by a British firm called the Bell Punch Company. Made out of discrete transistors, it was about the size of a cash register. Four years later, Texas Instruments came out with a slightly smaller IC calculator, and other companies followed suit. These gadgets were composed of logic ICs and two types of memory chips: RAMs, for storing numbers entered by the user and calculated by the machine; and read-only memories (ROMs), for holding the device’s internal operating instructions, such as the procedure for finding square roots. (RAM is like a scratchpad, ROM like a reference book.) Although the first IC calculators were quite limited and cost hundreds of dollars, they led to the development of cheap pocket versions in the early 1970s – and the slide rule, that utilitarian holdover from the early seventeenth century, became extinct.

In the summer of 1969, Busicom, a now-defunct Japanese calculator manufacturer, asked Intel to develop a set of chips for a new line of programmable electronic calculators. (The IC’s extraordinary capabilities were leading to a blurring of the line between calculators and computers.) Busicom’s engineers had worked up a preliminary design that called for twelve logic and memory chips, with three to five thousand transistors each. By varying the ROMs, Busicom planned to offer calculators with different capabilities and options. One model, for example, contained a built-in printer. The company’s plans were quite ambitious; at the time, most calculators contained six chips of six hundred to a thousand transistors each. But Intel had recently developed a technique for making two-thousand-transistor chips, and Busicom hoped that the firm could make even more sophisticated ICs.

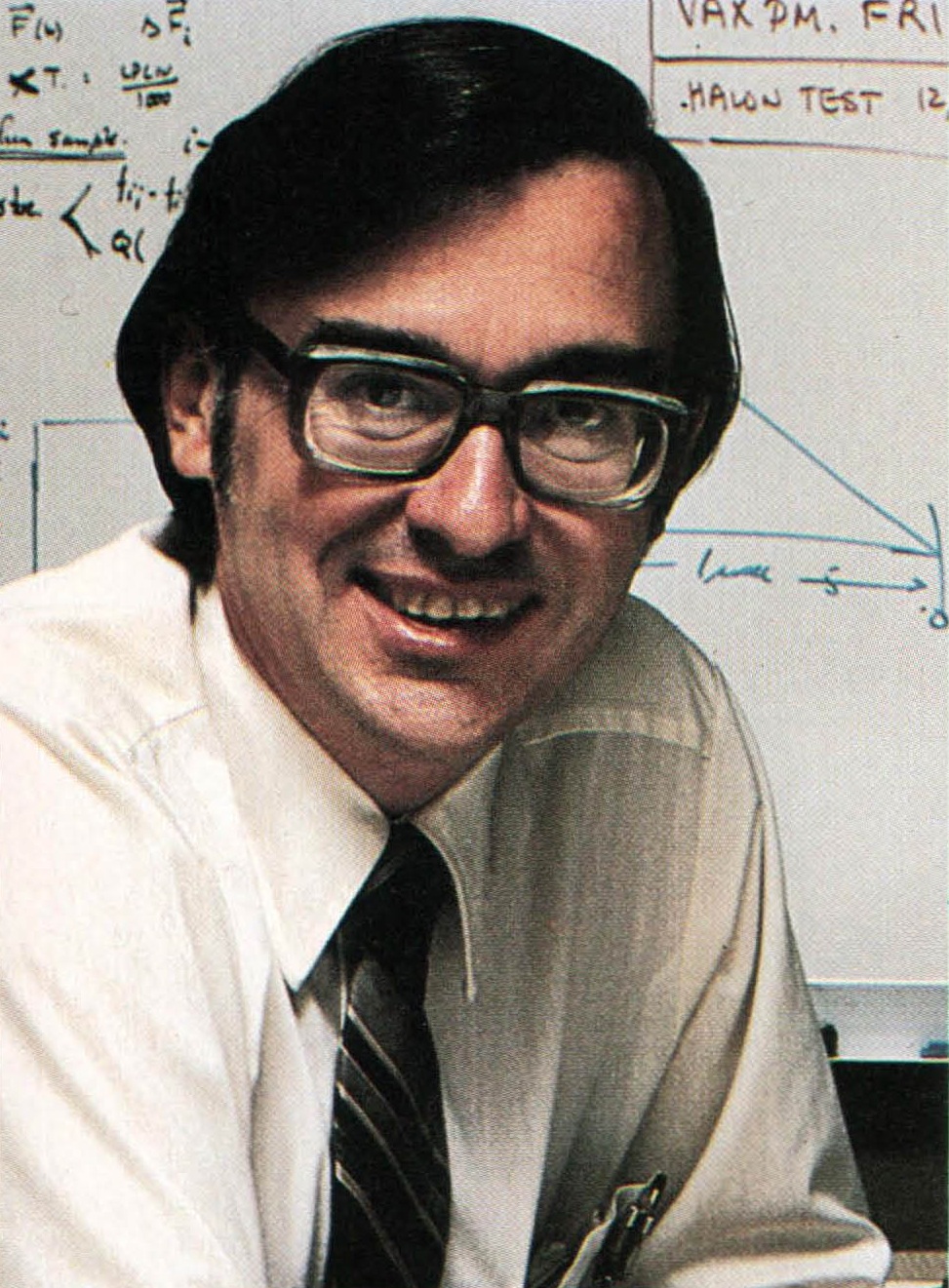

Intel assigned the Busicom job to Marcian E. Hoff, a thirty-two-year-old engineer with a B.S. in engineering from Rensselaer Polytechnic Institute in Troy, New York, and a doctorate from Stanford. A natural engineer, with the thoughtful manner of a professor and the caution of a corporate executive, Hoff – Ted to his friends – had a knack for spotting new solutions to technical problems. “When my washing machine breaks,” said one of Hoff’s colleagues and admirers, “I call the Sears repairman. When a clever person’s machine breaks, he goes down to Sears, buys a new part, and installs it himself. But if Ted’s machine breaks, he analyzes the problem, redesigns the faulty part, casts it in his own crucible, polishes it on his lathe, and installs it himself – and the machine works better than ever.”

Hoff studied Busicom’s design and concluded that it was much too complicated to be cost effective. Each calculator in the line needed one set of logic chips to perform basic mathematical functions and another to control the printers and other peripheral devices. (A logic chip can only carry out a fixed series of operations, determined by the pattern of its logic gates. A logic chip that has been designed to control a printer usually can’t do anything else.) Even though some of the calculator’s functions would be controlled by ROM, which sent instructions to the logic chips and, therefore, enabled some of them to do slightly different tasks, the bulk of the operations would be performed by the logic ICs. Although Intel could have produced chips of the required complexity, the productive yield – the number of working chips – would have been prohibitively low.

Busicom was looking forward and backward at the same time. The first logic chips, produced in the early 1960s, contained only a handful of components. As the state of IC technology advanced, the devices became more complex and powerful, but they also became more difficult and expensive to design. Since a different set of logic chips was required for every device, the chips destined for one gadget couldn’t be used in another. The IC companies developed various techniques to streamline the design process – using computers to help lay out the chips, for example – but the results were disappointing. An engineering bottleneck was developing; unless a simpler way of designing the chips was perfected, the IC industry wouldn’t be able to keep up with the burgeoning demand for its components, no matter how many engineers it employed.

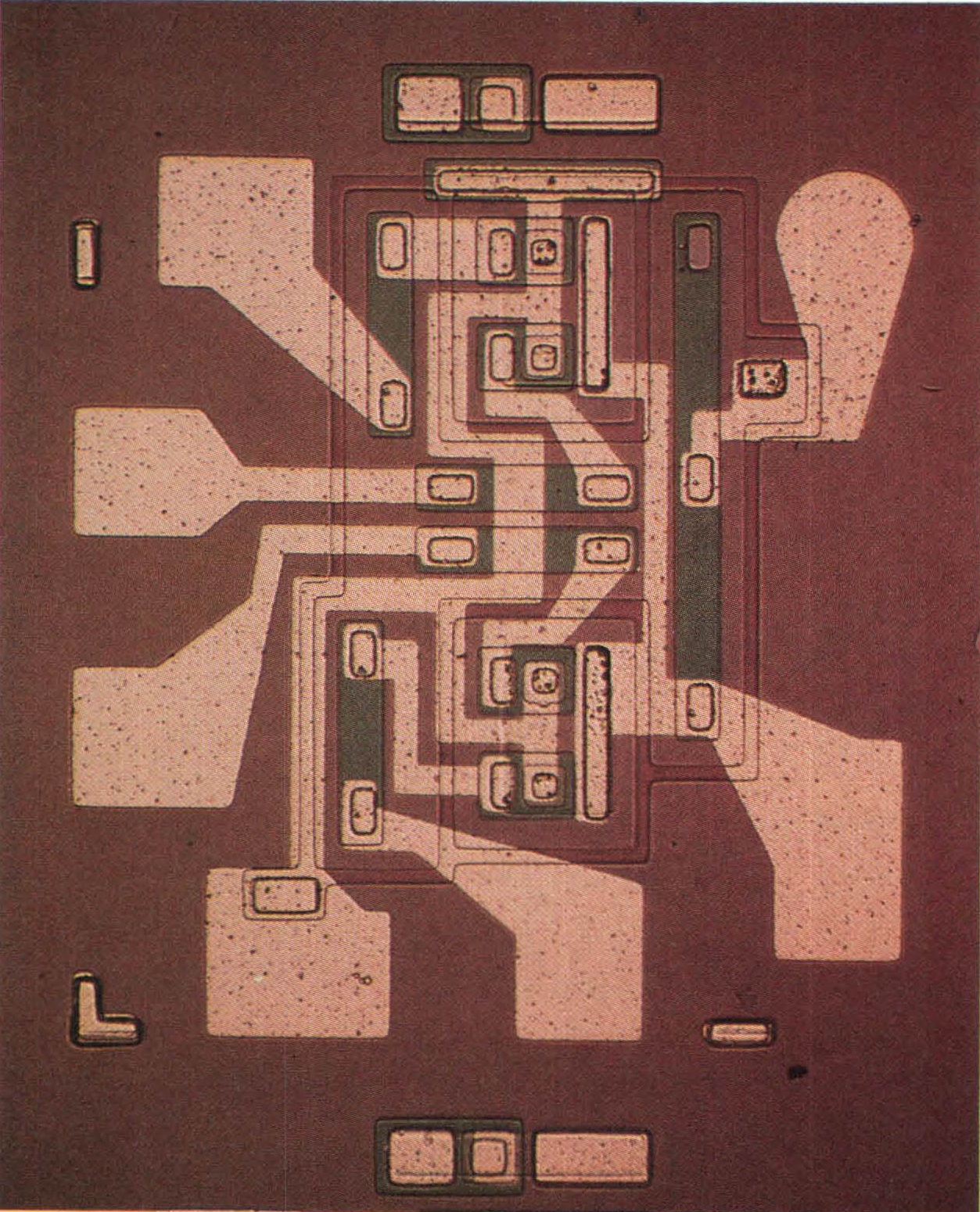

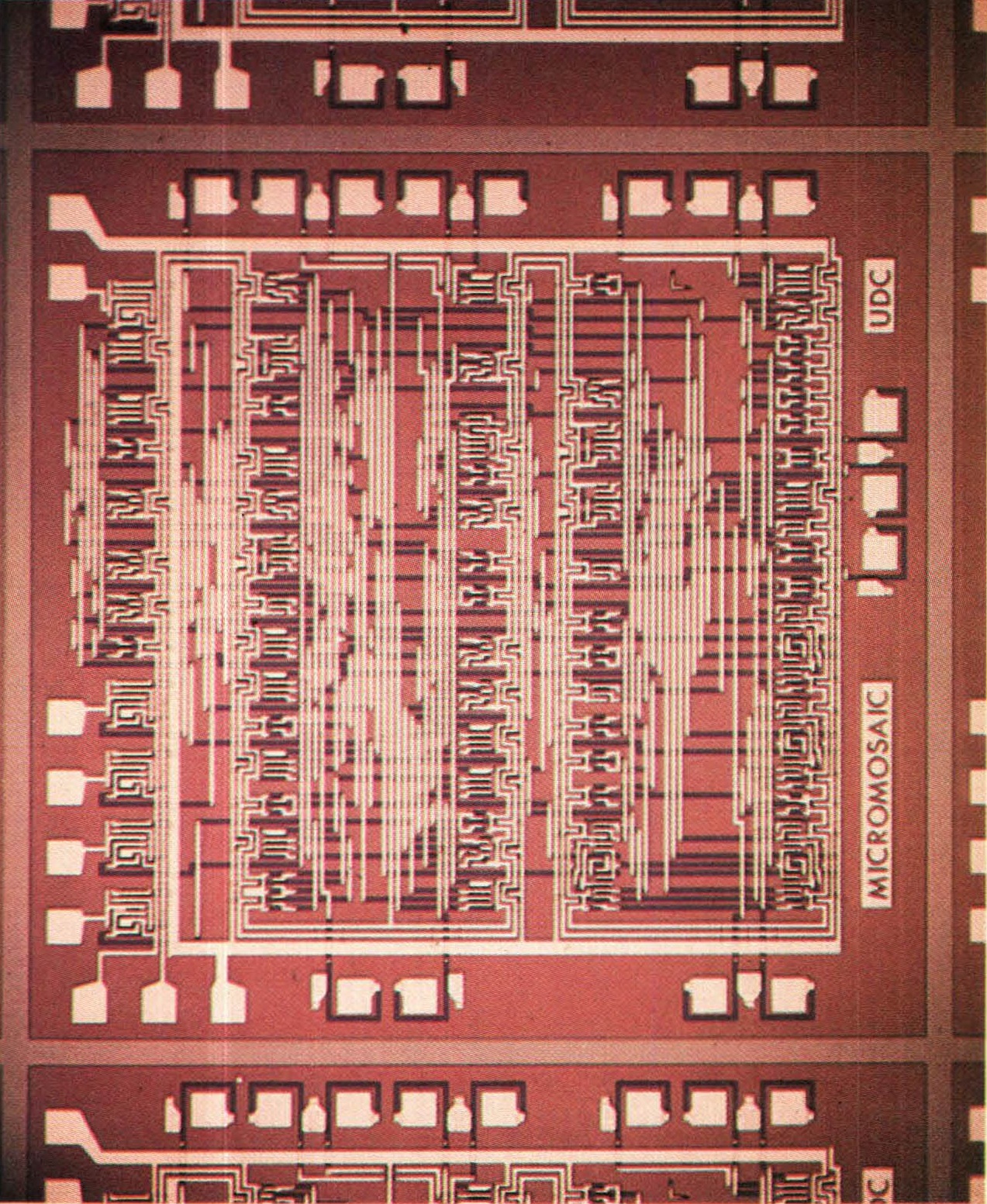

Fortunately, Hoff came up with a solution. Why not, he suggested, develop a general-purpose logic chip, one that could, like the central processor of a computer, perform any logical task? Like a conventional central processor, the microprocessor would be programmable, taking its instructions from RAM and ROM. So if a customer (like Busicom) wanted to make a calculator, it would write a calculator program, and Intel would insert that program into ROM. Each calculator would need one microprocessor and one programmed ROM, along with several other chips (depending on the complexity of the device). Similarly, if another customer came along with plans for a digital clock, it would devise a clock program, and Intel would produce the requisite ROMs. This meant that Intel wouldn’t have to work up a new set of logic chips for every customer; the burden of design would be shifted to the customer and transformed into the much less costly and time-consuming matter of programming.
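Hoff’s idea, one fixed processor whose behavior is set entirely by the program in ROM, can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the instruction names (`LOAD`, `ADD`, `ADDI`, `MOD`, `STORE`, `HALT`), the `run` function, and the two sample ROMs are hypothetical and are not the 4004’s actual instruction set. The point is only that the same `run` routine serves a “calculator” and a “clock” once it is handed a different ROM.

```python
def run(rom, ram, max_steps=1000):
    """Execute the program stored in `rom`, using `ram` as a scratchpad.

    Hypothetical toy processor: one accumulator, one program counter.
    """
    pc = 0    # program counter: index of the next instruction in ROM
    acc = 0   # accumulator: the single working register
    for _ in range(max_steps):
        op, arg = rom[pc]
        pc += 1
        if op == "LOAD":      # copy a RAM cell into the accumulator
            acc = ram[arg]
        elif op == "ADD":     # add a RAM cell to the accumulator
            acc += ram[arg]
        elif op == "ADDI":    # add a constant to the accumulator
            acc += arg
        elif op == "MOD":     # wrap the accumulator around a constant
            acc %= arg
        elif op == "STORE":   # copy the accumulator into a RAM cell
            ram[arg] = acc
        elif op == "HALT":
            return ram
        else:
            raise ValueError(f"unknown instruction: {op}")
    raise RuntimeError("program did not halt")


# "Calculator" ROM: ram[2] = ram[0] + ram[1]
CALCULATOR_ROM = [("LOAD", 0), ("ADD", 1), ("STORE", 2), ("HALT", None)]

# "Clock" ROM: advance the seconds counter in ram[0], wrapping at 60
CLOCK_ROM = [("LOAD", 0), ("ADDI", 1), ("MOD", 60), ("STORE", 0), ("HALT", None)]

print(run(CALCULATOR_ROM, [2, 3, 0]))  # [2, 3, 5]
print(run(CLOCK_ROM, [59]))            # [0]
```

Turning the calculator into a clock required no change to `run`, only a different list of instructions, which is the sense in which the burden of design moves from chip layout to programming.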

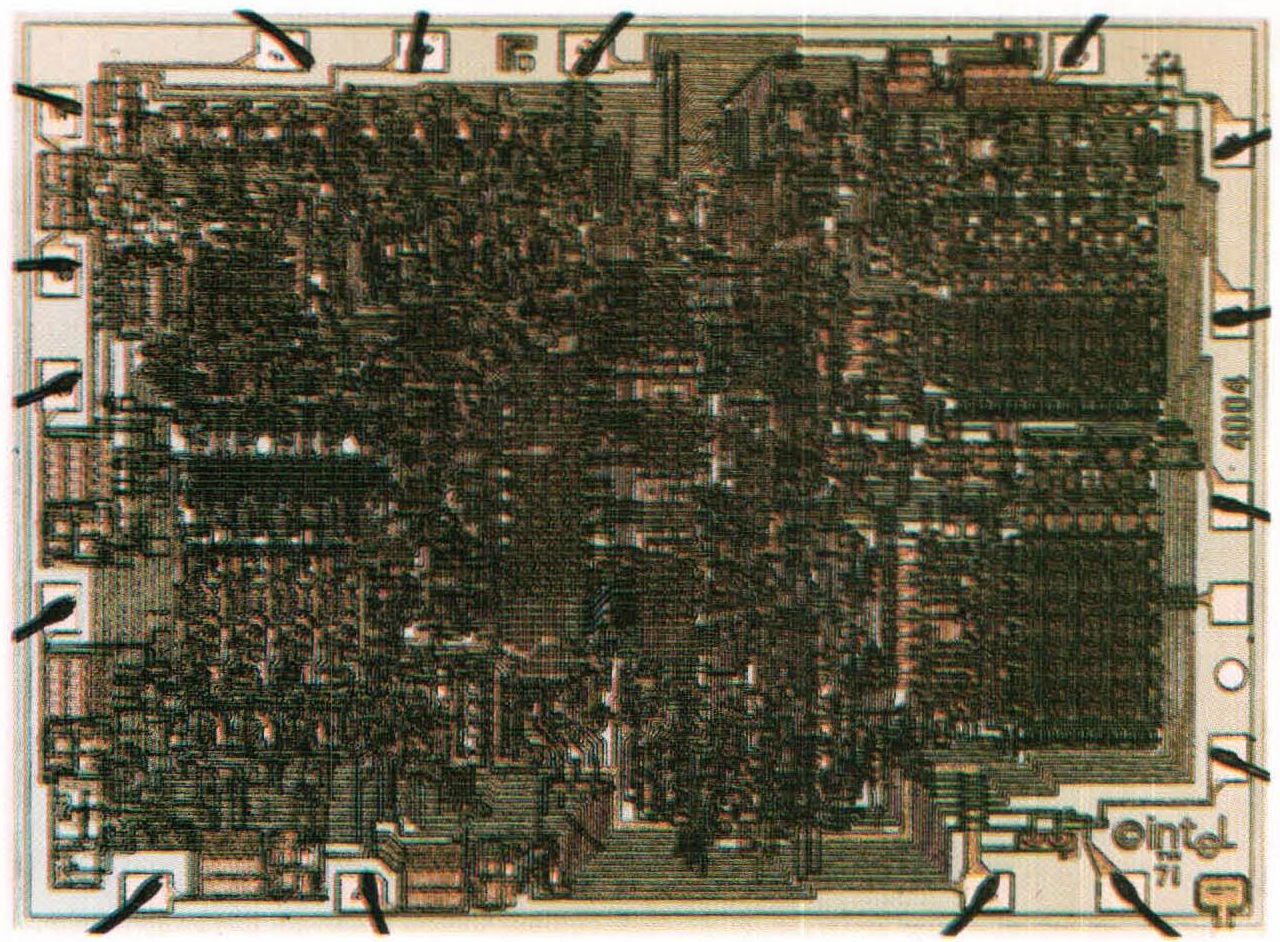

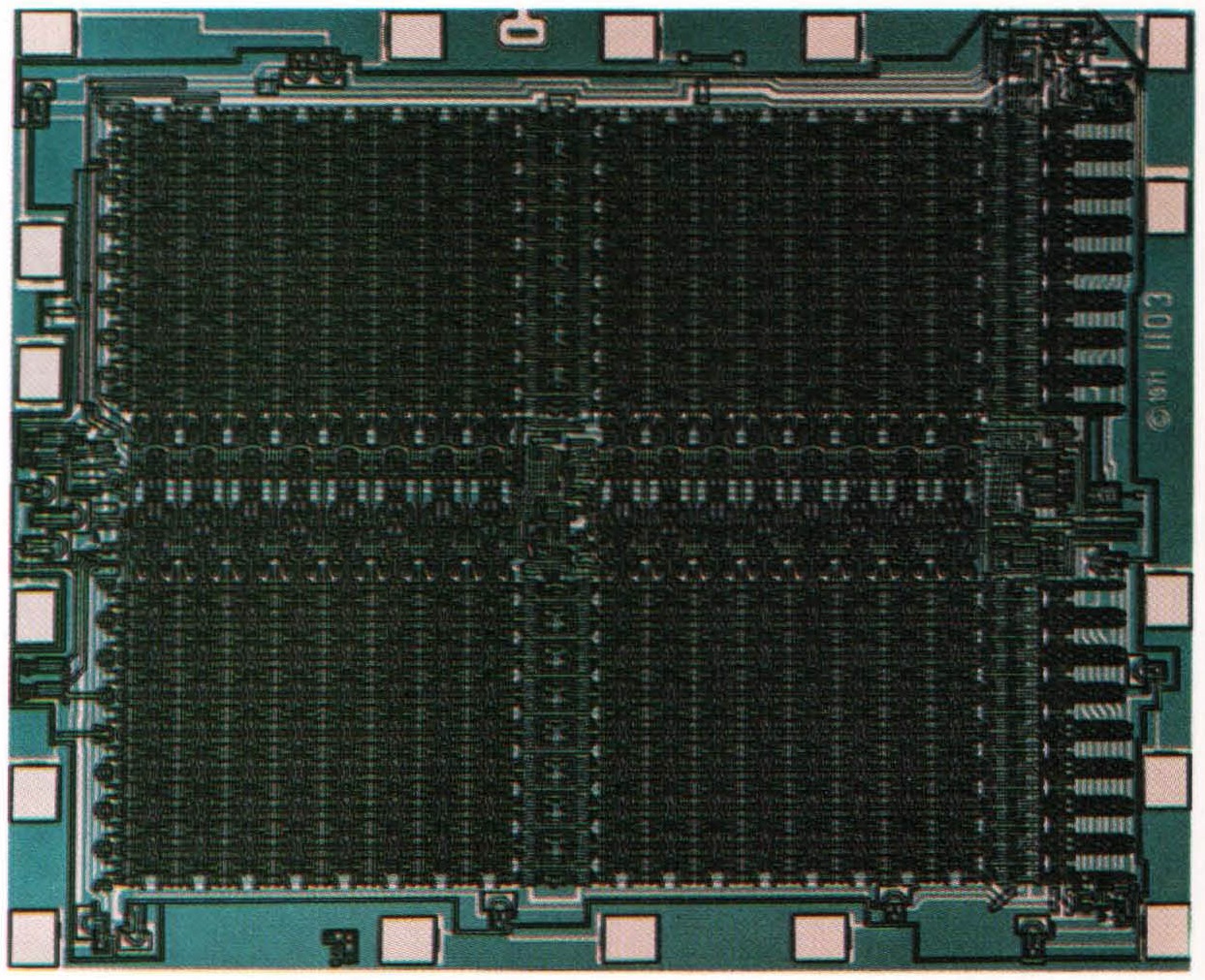

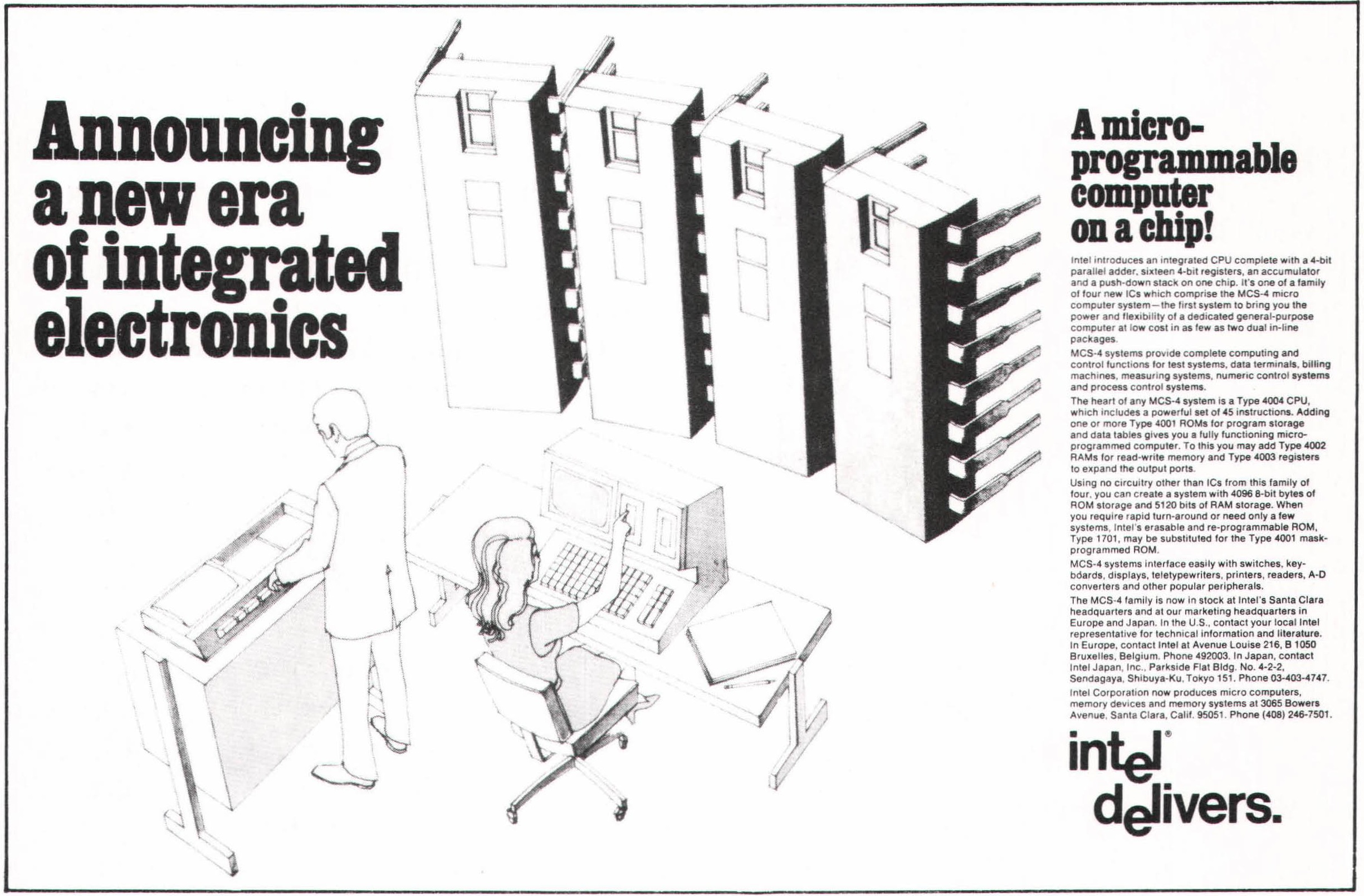

It was a brilliant idea. Instead of twelve chips, Busicom’s calculators now needed only four – a microprocessor, a ROM, a RAM, and an input/output IC to relay signals between the microprocessor and the outside world. Not only did Hoff’s invention cut down the number of chips and, therefore, the number of interconnections (thereby increasing the calculators’ reliability), it also resulted in a much more flexible and powerful family of calculators. Literally a programmable processor on a chip, a microprocessor expands a device’s capabilities at the same time as it cuts its manufacturing costs. In other words, it’s one of those rare innovations that give more for less. Busicom accepted Hoff’s scheme, and the first microprocessor, designated the 4004, rolled off Intel’s production line in late 1970.

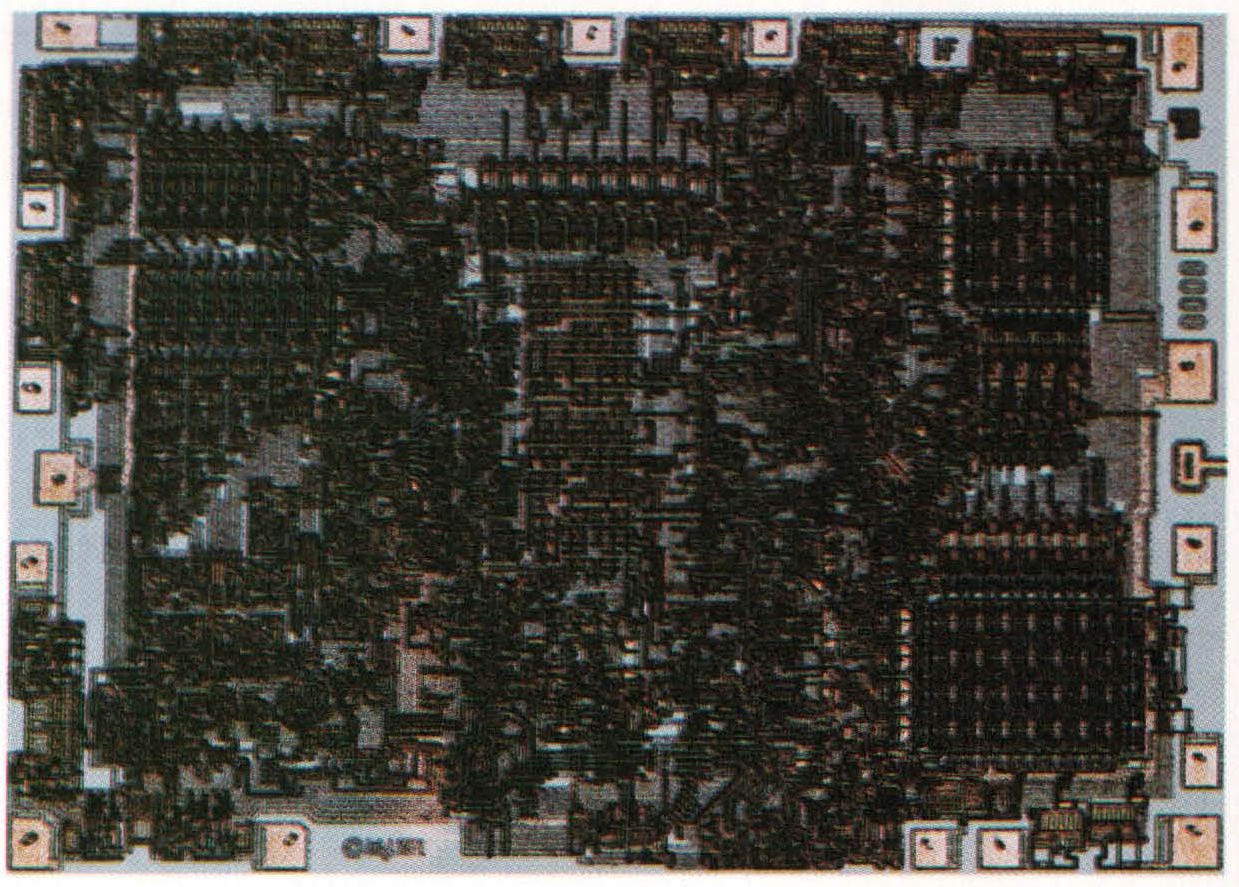

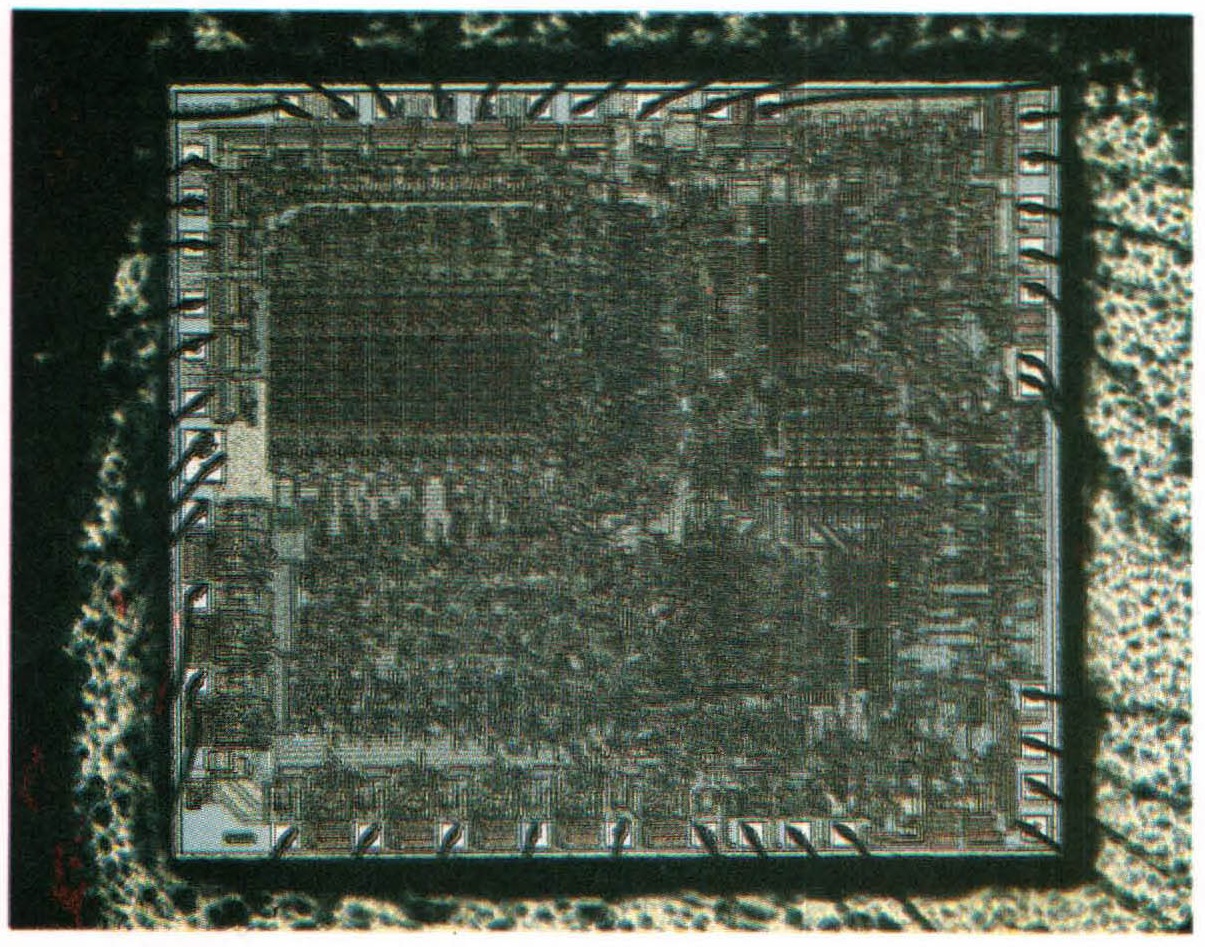

The 4004 wasn’t a very potent computational tool. With 2,250 transistors, it could process only four bits of data at a time and carry out about 60,000 operations a second. It wasn’t powerful enough to serve as the central processor of a minicomputer, but it was quite adequate for a calculator and other relatively simple electronic devices, like taximeters or cash registers. The other three chips in the set were also limited. The ROM, which contained the inner program that governed the calculator, stored 2K bits of data, and the RAM, which provided temporary storage, held a mere 320 bits. Nevertheless, the four chips constituted a bona fide computer that, mounted on a small circuit board, occupied about as much space as a pocketbook.
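To see what processing “four bits of data at a time” implies, consider how a four-bit machine can add numbers too large for a single four-bit value: it works through them one four-bit slice (a nibble) at a time, passing a carry from each slice to the next. The sketch below is an illustration only; the helper names `to_nibbles`, `add_nibbles`, and `from_nibbles` are hypothetical, and the code does not reproduce the 4004’s actual instructions. It also works out how many four-bit values fit in the 320-bit RAM mentioned above.

```python
def to_nibbles(n, count):
    """Split a non-negative integer into `count` 4-bit slices, lowest first."""
    return [(n >> (4 * i)) & 0xF for i in range(count)]


def add_nibbles(a, b):
    """Add two equal-length nibble lists, four bits at a time.

    Returns the sum as a nibble list plus the final carry (0 or 1).
    """
    result = []
    carry = 0
    for x, y in zip(a, b):
        total = x + y + carry       # at most 15 + 15 + 1 = 31
        result.append(total & 0xF)  # keep the low four bits
        carry = total >> 4          # anything above four bits carries over
    return result, carry


def from_nibbles(nibbles):
    """Reassemble an integer from 4-bit slices, lowest first."""
    return sum(d << (4 * i) for i, d in enumerate(nibbles))


digits, carry = add_nibbles(to_nibbles(1963, 4), to_nibbles(1971, 4))
print(from_nibbles(digits), carry)  # 3934 0

RAM_BITS = 320
print(RAM_BITS // 4)  # 80 four-bit values fit in the RAM
```

A sixteen-bit addition therefore takes four passes through the loop rather than one, which is part of why a four-bit processor suited calculators better than minicomputers.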

Because Intel had developed the 4004 under contract for Busicom, the Japanese company had an exclusive right to the chip and Intel couldn’t offer it on the open market. But in the summer of 1971, Busicom asked Intel to cut its prices – the calculator business had become quite competitive – and, in exchange for the price reduction, Intel won the right to market the 4004. Even so, the company hesitated. No one had fully grasped the enormous utility of Hoff’s invention, and Intel assumed that the chip would be used chiefly in calculators and minicomputers. About 20,000 minicomputers were sold in 1971; at best, the 4004 (and other, more sophisticated microprocessors Intel was considering developing) would wind up in 10 percent of these machines – a prospect that wasn’t very interesting to a small semiconductor company bent on becoming a big one.

Although Intel didn’t realize it at first, the company was sitting on the device that would become the universal motor of electronics, a miniature analytical engine that could take the place of gears and axles and other forms of mechanical control. It could be placed inexpensively and unobtrusively in all sorts of devices – a washing machine, a gas pump, a butcher’s scale, a jukebox, a typewriter, a doorbell, a thermostat, even, if there was a reason, a rock. Almost any machine that manipulated information or controlled a process could benefit from a microprocessor. Fortunately, Intel did have an inkling, just an inkling, of the microprocessor’s potential. The company decided to take a chance, and the 4004 and its related chips were introduced to the public in November 1971.

Not surprisingly, the 4004 sold slowly at first, but orders picked up as engineers gained a clearer understanding of the chip’s near-magical electronic properties. Meanwhile, Intel went to work on more sophisticated versions. In April 1972, it introduced the first eight-bit microprocessor, the 8008, which was powerful enough to run a minicomputer (and, as we shall see, at least one inventor used it for just that purpose). The 8008 had many technical drawbacks, however, and it was superseded two years later by a much more efficient and powerful microprocessor, the now-legendary 8080. The 8080 made dozens of new products possible – including the personal computer. For several years, Intel was the only microprocessor maker in the world, and its sales soared. By the end of 1983, Intel was one of the largest IC companies in the country, with 21,500 employees and $1.1 billion in sales.