All the early computers – ENIAC, the IAS machine, Manchester University’s Mark I, the IBM 701, and Whirlwind – were maddeningly difficult to program. In general, the first computers were programmed either in machine code, which consisted of binary numbers, or in codes known as assembly languages, which were composed of letters, numbers, symbols, and short words, such as “add.” After being fed into the computer, assembly programs written in these languages were translated automatically into machine code by internal programs called assemblers, and the resulting machine code, punched out on cards or tape, was then re-entered into the computer by the operator. As computer technology advanced, high-level programming languages that used ordinary English phrases and mathematical expressions were developed. Because computers are only as useful as their programs, the computer industry – particularly IBM and Remington Rand – put a great deal of effort into the development of efficient and economical programming techniques.

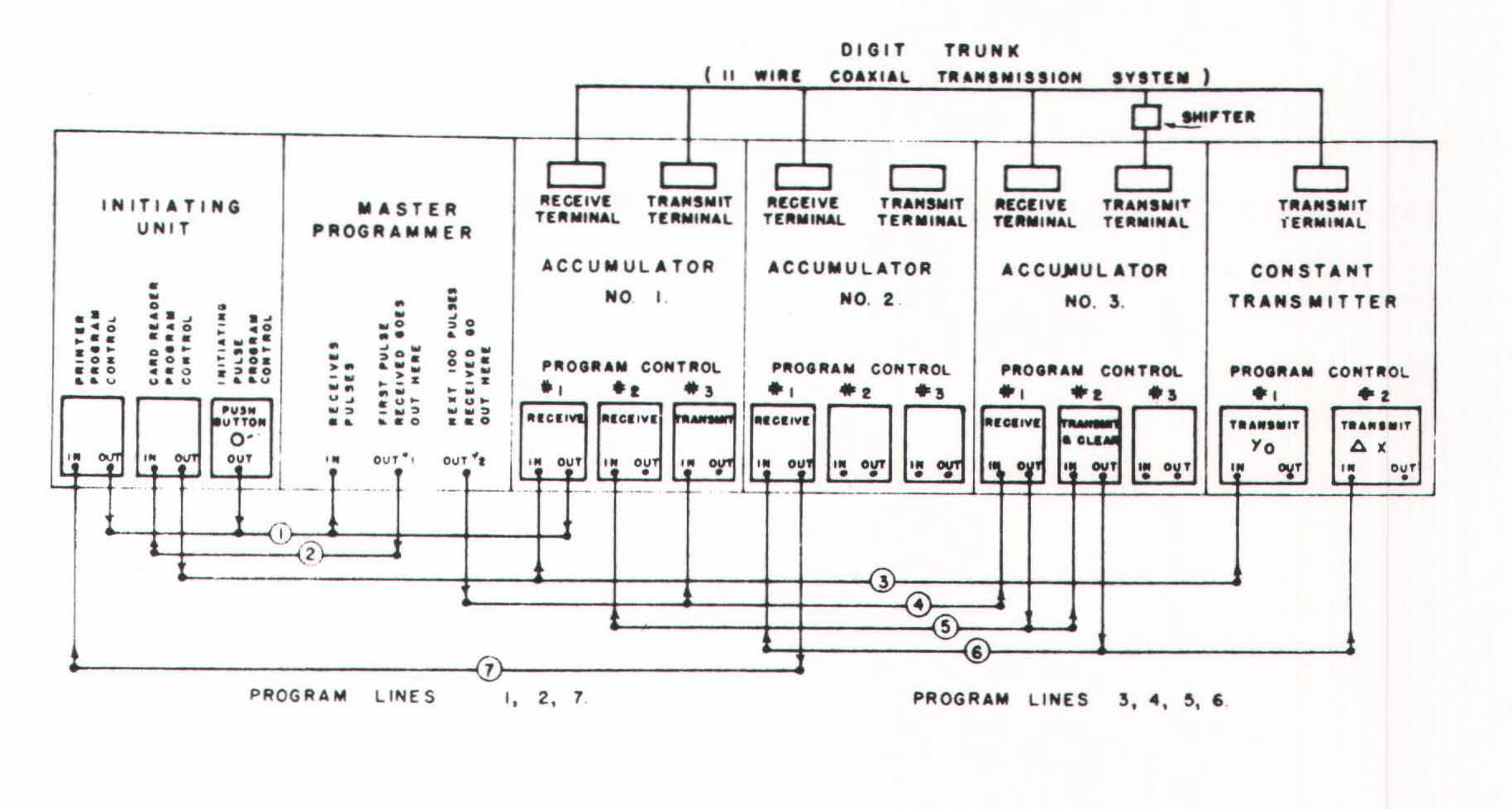

An ENIAC program was really a wiring diagram. It showed exactly how the machine’s switches and plug boards ought to be set to solve a given problem – not at all what we’d consider a program today. But ENIAC was quite unlike its stored-program descendants. For example, it possessed two kinds of circuits: numerical circuits, which relayed the electrical pulses that represented numbers, and programming circuits, which coordinated the sequences of operations that implemented a program. As a result, an ENIAC program consisted of two parts, one dealing with the numerical circuits, the other with the programming ones. Every single operation had to be provided for, and it took two or three days to set up ENIAC for a complicated problem. Programmers had to know the machine inside out, right down to the smallest circuits.

When ENIAC was built, there were no formal, standardized, conceptual procedures for solving problems by computer. So the ENIAC team set about inventing one. Herman Goldstine, the mathematician and Army lieutenant who brought Mauchly’s idea for an electronic calculator to the attention of the Ordnance Department, thought that a pictorial representation of a computer’s operation would be the best aid to clear thinking and careful planning. With von Neumann’s help, he developed flow charting. Although flow charts have since fallen out of favor as a programming tool, many programmers still use them.

By the late 1940s, ENIAC had been superseded by stored-program computers, which meant that instead of fiddling with a computer’s wiring, you now could feed instructions directly into the machines through punch card readers, magnetic-tape decks, or other means. For example, the first stored program run on the Mark I consisted of binary numbers entered via a keyboard, with each key corresponding to a coordinate, or address, in the memory. A list of instructions for finding the highest factor of a number, it contained seventeen lines of sixteen bits each. The program itself has been lost, but a line in it might have looked like this: 1001000010001001. The last three digits specified the operation (addition, subtraction, and so on), while the first thirteen signified the address either of another instruction or of a piece of data.
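The layout described above, thirteen address digits followed by three operation digits, can be illustrated with a minimal sketch. It assumes the digits are read as ordinary binary with the most significant digit first, which the text does not specify; the function name `decode` is hypothetical, and the sample word is the illustrative one from the paragraph, not a line of the lost program.

```python
def decode(word: str) -> tuple[int, int]:
    """Split a sixteen-digit instruction word into (address, operation)."""
    if len(word) != 16 or set(word) - {"0", "1"}:
        raise ValueError("expected a string of sixteen 0s and 1s")
    # Assumption: digits are read most-significant-first, as in ordinary binary.
    address = int(word[:13], 2)    # first thirteen digits
    operation = int(word[13:], 2)  # last three digits
    return address, operation


address, operation = decode("1001000010001001")
print(address, operation)
```

Three operation digits allow only eight distinct operations, which suggests how small the instruction set of such a program had to be.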

Although this programming method was a marked improvement over ENIAC’s, it nevertheless left much to be desired. The binary system is ideal for machines but awkward for people. Therefore, Alan Turing, the Mark I’s chief programmer, developed an assembly language (or symbolic language as it was also called at the time) that substituted letters and symbols for 0s and 1s. For example, a “/W” ordered the Mark I to generate a random number; a “/V,” to come to a stop. The language’s instruction set, or repertoire of commands, contained fifty items, sufficient to perform almost any operation. The system was really a mnemonic code – and a clumsy one at that – whose symbols, entered via a teleprinter, were automatically translated into binary math by the computer. There was nothing complicated about the translation process; every letter and symbol was turned into a predetermined binary number, and then executed.
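A translation of this kind amounts to a table lookup, as the following sketch suggests. The five-digit codes in the table are placeholders invented for illustration, not the Mark I's actual character codes, and the name `assemble` is likewise hypothetical.

```python
# Placeholder codes for illustration only: NOT the Mark I's real encoding.
SYMBOL_TO_BITS = {
    "/": "00000",
    "V": "00001",
    "W": "00010",
}


def assemble(symbols: str) -> str:
    """Replace each symbol with its predetermined binary number."""
    try:
        return "".join(SYMBOL_TO_BITS[ch] for ch in symbols)
    except KeyError as err:
        raise ValueError(f"unknown symbol: {err.args[0]!r}") from None


print(assemble("/W"))
print(assemble("/V"))
```

Because every symbol maps to exactly one fixed number, the translator needs no knowledge of what the instructions mean, which is why the process was so simple.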

By the way, the Mark I’s random number generator, which was installed at Turing’s suggestion and ran off a source of electronic noise, supplied some fun and games. F. C. Williams, who headed the Mark I project and who invented electrostatic storage tubes, wrote a little gambling program that counted the number of times a given digit, from 0 to 9, was produced by a run of the generator. But Williams adjusted the generator to lean toward his favorite number, and he enjoyed betting against unsuspecting visitors. The beginnings of computer crime!

UNIVAC was programmed with a simpler and more advanced language than the Mark I. In 1949, at Mauchly’s suggestion, an alphanumeric instruction set was devised for BINAC and adapted, in improved form, for UNIVAC. This instruction set was called Short Code, and it enabled algebraic equations to be written in terms that bore a one-to-one correspondence to the original equations. For example, a = b + c was represented as S0 03 S1 07 S2 in Short Code, with S0, S1, and S2 standing for a, b, and c; 03 meaning “equal to”; and 07 signifying “add.” Inside UNIVAC, an interpreter program automatically scanned the code and executed each line one at a time. (That is, it did not produce cards or tape that had to be reentered into the machine; it carried out the program automatically.) Although Remington Rand made many extravagant claims for Short Code, you still had to supply detailed instructions for every operation.
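The way an interpreter scans and executes such a line can be sketched in a few lines of modern code. This is a toy that handles only the two codes given above (03 for “equal to” and 07 for “add”); the function name `run_line` and the dictionary of variables are assumptions for illustration and say nothing about how the UNIVAC interpreter was actually built.

```python
def run_line(line: str, variables: dict[str, float]) -> None:
    """Execute one line of the form 'S0 03 S1 07 S2' and store the result."""
    target, equals, *expr = line.split()
    if equals != "03":
        raise ValueError("expected 03 ('equal to') after the target")
    if len(expr) % 2 == 0:
        raise ValueError("expected operands separated by operation codes")
    total = variables[expr[0]]
    # Walk the remaining (operation, operand) pairs from left to right.
    for op, name in zip(expr[1::2], expr[2::2]):
        if op != "07":
            raise ValueError(f"this sketch only knows 07 ('add'), got {op}")
        total += variables[name]
    variables[target] = total


variables = {"S1": 2.0, "S2": 3.0}  # b = 2, c = 3
run_line("S0 03 S1 07 S2", variables)  # a = b + c
print(variables["S0"])
```

Nothing is written out for later re-entry: each line is carried out as soon as it is read, which is the distinction the paragraph draws.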

In the early 1950s, most computers were used for scientific and engineering calculations. These calculations involved very large numbers, and the usual way of writing a number – groups of figures separated by a comma – was inconvenient. Instead, programmers used the floating-point system of numerical notation, which reduced big numbers to a more manageable size. (A floating-point number is a fraction multiplied by a power of two, ten, or any figure. For example, 2,500 is .25 × 10⁴; 250,000 is .25 × 10⁶; and 25,000,000 is .25 × 10⁸.) But the computers of the period couldn’t perform floating-point operations automatically. Nor could they automatically assign memory addresses, a task known as indexing, or handle input and output. As a result, programmers had to spend a lot of time writing subroutines, or segments of programs, telling the machines exactly how to perform these operations. And computers spent most of their time carrying out such subroutines.
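To give a feel for what such a subroutine had to do, here is a minimal sketch of the fraction-and-exponent notation in base ten, along with a multiplication routine built on it. The function names are hypothetical, and Python's `decimal` module stands in for the hand-written arithmetic of the period; it is not a reconstruction of any historical routine.

```python
from decimal import Decimal


def to_floating(value) -> tuple[Decimal, int]:
    """Return (fraction, exponent) with value == fraction * 10**exponent
    and 0.1 <= |fraction| < 1 (zero is returned as (0, 0))."""
    d = Decimal(value)
    if d == 0:
        return Decimal(0), 0
    exponent = d.adjusted() + 1        # position of the leading digit, plus one
    fraction = d.scaleb(-exponent)     # shift the decimal point left
    return fraction, exponent


def multiply(a: tuple[Decimal, int], b: tuple[Decimal, int]) -> tuple[Decimal, int]:
    """Multiply the fractions, add the exponents, then renormalize."""
    fraction, shift = to_floating(a[0] * b[0])
    return fraction, a[1] + b[1] + shift


print(to_floating(2500))                                   # .25 x 10^4
print(multiply(to_floating(2500), to_floating(250000)))    # 2,500 x 250,000
```

Even this short routine involves splitting, shifting, and renormalizing for a single multiplication, which hints at why machines without floating-point circuits spent so much of their time inside subroutines.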

The more forward-looking programmers began tinkering with the idea of automating programming (and they’re still working on it). If indexing, floating-point, and input/output operations could be performed automatically by computers, then the more creative aspects of programming – the things that only people could do – would be left to the programmers, and the machines would be freed from many time-consuming chores. Of course, all this was easier said than done. You needed an internal program that was smart enough and fast enough to translate a programmer’s instructions into efficient machine code. In other words, wrote John Backus and Harlan Herrick, two IBM programmers who, as we shall see, managed to develop just such an internal program: “Can a machine translate a sufficiently rich mathematical language into a sufficiently economical program at a sufficiently low cost to make the whole affair feasible?”

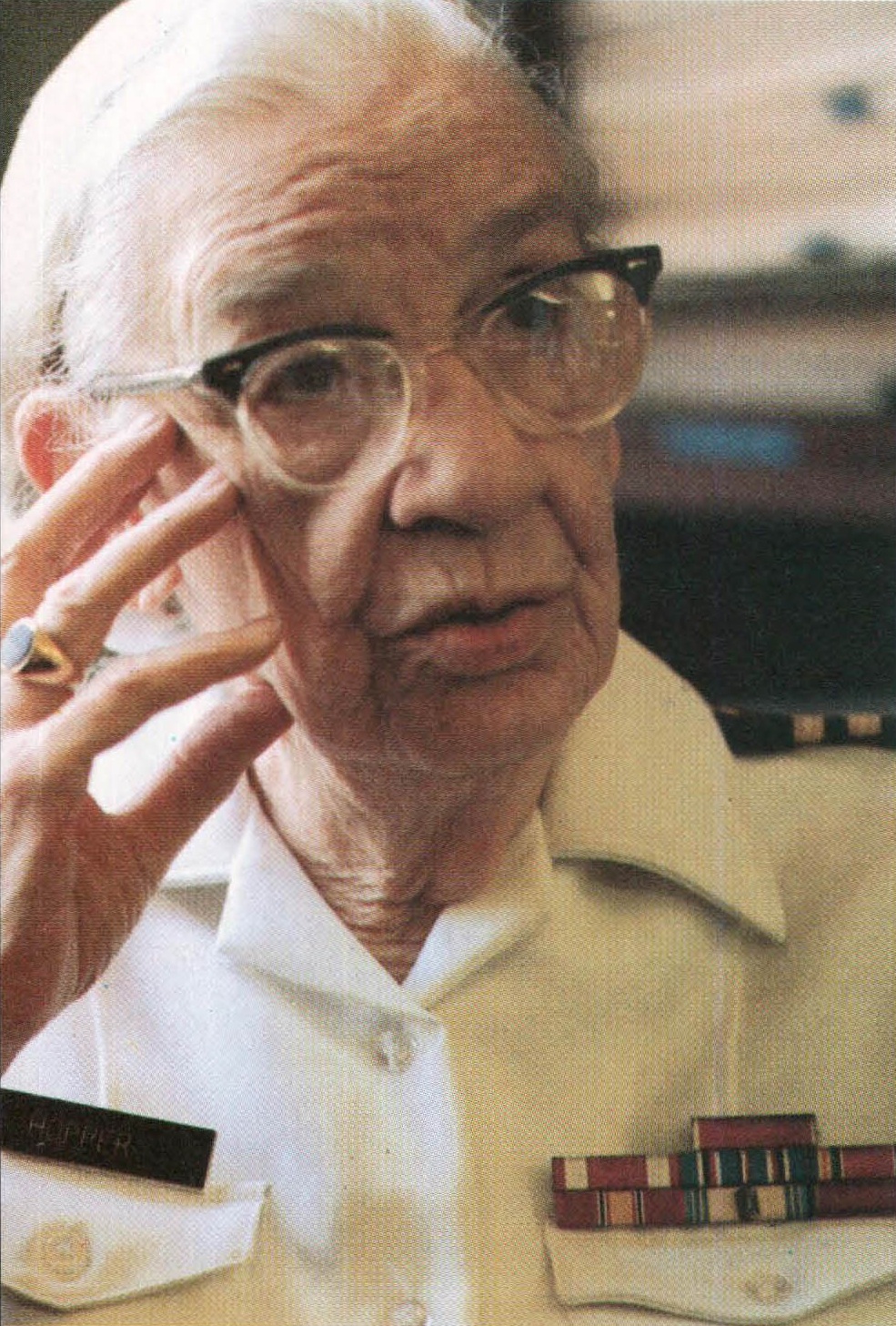

In 1951, Grace Murray Hopper, a mathematician at Remington Rand, conceived of a new type of internal program that could perform floating-point operations and other tasks automatically. The program was called a compiler, and it was designed to scan a programmer’s instructions and produce, or compile, a roster of binary instructions that carried out the user’s commands. Unlike an interpreter, a compiler generated an organized program, then carried it out. Moreover, a compiler had the ability to understand ordinary words and phrases and mathematical expressions. Hopper and Remington Rand devised a compiler and associated high-level language that had some success. Although “automatic programming” helped the firm sell computers, it wasn’t all that it was cracked up to be. (“Automatic programming, tried and tested since 1950, eliminates communication with the computer in special code or language,” declared a UNIVAC news release in 1955.)

But Hopper was an excellent proselytizer, and her techniques spread. In 1953, two MIT scientists, J. Halcombe Laning and Neal Zierler, invented one of the first truly practical compilers and high-level languages. Developed for Whirlwind, it used ordinary words, such as “PRINT” and “STOP,” as well as equations in their natural form, like a + b = c. In addition, it performed most housekeeping operations, such as floating-point, automatically. Unfortunately, the compiler was terribly inefficient. Although Laning and Zierler’s language was easy to learn and use, the compiler required so much time to translate the programmer’s instructions into binary numbers that Whirlwind was slowed to a crawl. “This was in the days when machine time was king,” Laning recalled, “and people time was worthless.” As a result, their system, though highly influential, was rarely used.

When IBM began taking orders for the scientific 704, in May 1954, they introduced it as the first of a new class of computers whose circuits could perform floating-point and indexing operations automatically. A 704 programmer wouldn’t have to compose floating-point subroutines for every scientific or engineering calculation, and the computer wouldn’t have to waste costly time wandering down the sticky byways of floating-point operations. At last, the two most time-consuming programming chores would be eliminated. But other programming inefficiencies now stuck out like boulders on a plain, unable to hide in the shade of floating-point and indexing subroutines. The 704 cried out for a compiler that could translate simple high-level instructions into machine codes that were just as good as those written by a programmer.