Commercial development of the IC followed rapidly, with all the companies concerned freely licensing their patents. In 1961, Fairchild and TI (using the planar process) introduced their first chips; TI’s offering, for example, consisted of six logic ICs that performed Boolean operations such as OR and NOR (or NOT OR). (An OR gate accepts two inputs; if either of them is 1, the output is also 1. A NOR gate is an OR gate followed by a NOT gate, otherwise known as an inverter, which converts a 1 or a 0 into its opposite value; a NOR gate’s output is therefore 1 only when both inputs are 0. By stringing gates together in clever ways, engineers endow computers with the power to make decisions.)
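To make the gate descriptions concrete, here is a minimal Python sketch. It treats signals as the integers 0 and 1, which is a simplifying assumption, since real gates work with voltage levels. The function names `or_gate`, `not_gate`, and `nor_gate` are hypothetical and chosen only for illustration. The NOR gate is built literally as described above: an OR gate whose output is fed into a NOT gate.

```python
from itertools import product


def or_gate(a: int, b: int) -> int:
    """OR: output is 1 if either input is 1."""
    return 1 if (a == 1 or b == 1) else 0


def not_gate(a: int) -> int:
    """NOT (inverter): turns a 1 into a 0 and a 0 into a 1."""
    return 1 - a


def nor_gate(a: int, b: int) -> int:
    """NOR: an OR gate followed by a NOT gate."""
    return not_gate(or_gate(a, b))


# Print a truth table covering every combination of two inputs
print("A B | OR NOR")
for a, b in product((0, 1), repeat=2):
    print(f"{a} {b} |  {or_gate(a, b)}   {nor_gate(a, b)}")
```

The table shows that NOR outputs 1 only in the single row where both inputs are 0, exactly the inverse of OR.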

The first ICs, or electronic chips, were very expensive, and it wasn’t until the mid-1960s that prices fell to reasonable levels. For example, TI’s chips cost $50 to $65 apiece in lots of a thousand or more, and roughly double that in small quantities. Only the government could afford to buy them in bulk, and the first ICs went to defense contractors and NASA. After the space agency picked Fairchild to supply the ICs for the Gemini capsule’s on-board computers, the company’s sales shot up. In ten years, between 1957 and 1967, Fairchild’s revenues rose from a few thousand dollars to $130 million, and the number of employees grew from the original eight to 12,000.

Of course, the computer industry was also keenly interested in the IC, which promised to bring about an exponential increase in the power and efficiency of its equipment. But the advent of the IC also presented an enormous challenge. The transition from the first to the second generation of computers – from tubes to transistors – had taken place only a few years earlier. It had cost millions of dollars to redesign the machines to accommodate transistors; just as you couldn’t pull out a tube and install a transistor in its place, you couldn’t remove a transistor and insert an IC. You had to redesign the circuits from the ground up. The one exception was the read/write memory, which enjoys a more or less autonomous existence within the computer; as long as the memory met the appropriate operational requirements, you could replace magnetic cores with ICs fairly easily. “There was a lot of work on semiconductor memory at IBM,” recalled an engineer at the company’s Advanced Computing Systems Division in San Jose, California (not far from Fairchild), “and a small group inside the company saying that integrated circuits were the wave of the future… but I wasn’t one of them at the time. I became a believer later. There were projections saying it would take all the sand in the world to supply enough semiconductor memory in order to satisfy IBM’s needs so many years out. IBM later became the first computer company to make the commitment to build memories with integrated circuits, but as for building logic circuits with ICs, there were simply too many unknowns. It was just too great a risk to commit the total corporation to integrated circuits for computer logic. In hindsight, you could say it was a bad decision, considering that the semiconductor companies soon got rather heavily into the computer people’s business. But at the time it was very justifiable. The selling point with the IC was low cost [though not at first], and IBM didn’t necessarily have to have the lowest cost. IBM sold on the basis of service and performance.”

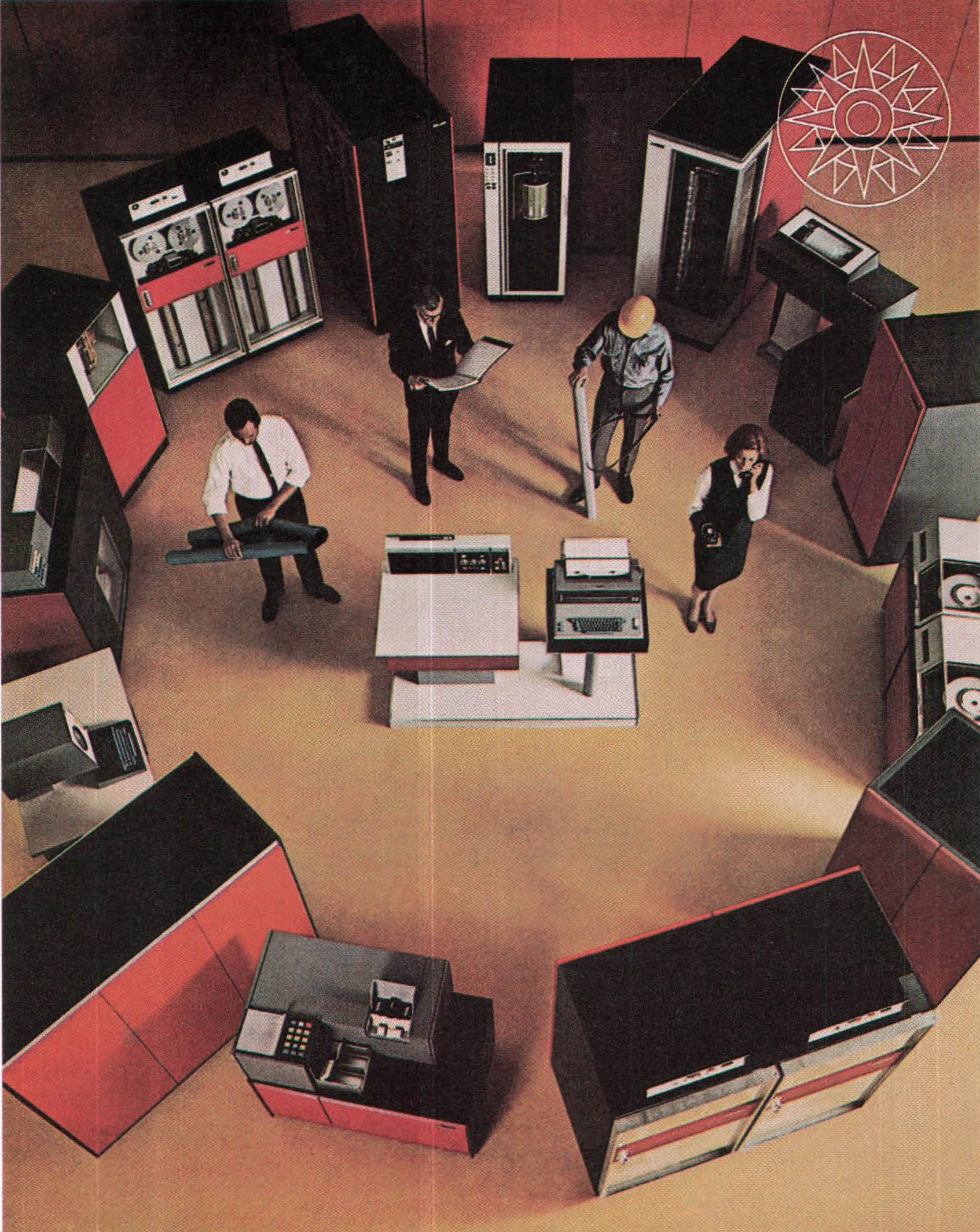

IBM, however, was caught in a bind. At about the same time the IC was making its debut, the company took a hard look at its burgeoning product line. It was turning out too many incompatible machines. A program written for one computer, even in FORTRAN, generally wouldn’t run automatically on another IBM computer, and this diversity put an unnecessary strain on the company’s resources. Nor was it possible to mix any of the peripheral equipment, such as magnetic-tape decks and high-speed printers. After a great deal of internal debate, the company decided to construct a comprehensive family of computers, with an array of compatible processors and software packages that would suit almost any application and budget. This meant that a small business could lease a small processor, secure in the knowledge that it could upgrade to a large processor without having to buy new peripherals and software. It also meant that a big company – for instance, a multinational bank – could buy dozens of compatible computers, machines that ran the same programs and used the same peripheral equipment.

The plan was sound, but it had numerous drawbacks. First, it would render IBM’s existing computers and software obsolete. Second, it would lead other companies to introduce equipment and software that was compatible with the system, thus cutting into IBM’s sales. Third, it would require an enormous effort to carry out, costing billions of dollars and tying up IBM’s resources for years. If it failed – if the company couldn’t pull the operation off in a timely and profitable fashion – IBM might lose its dominance of the computer industry. Fourth, it caught IBM between two technological generations: transistors, on their way out, and ICs, on their way in. It seemed unwise to base the entire project on ICs, which were not yet sufficiently proven, yet it wouldn’t do to use transistors. The alternative was to compromise on small ceramic modules that would incorporate several discrete components into the same unit.

Despite the problems, IBM decided to go ahead. The effort to build the System/360, as the line of computers was called, cost at least $5 billion over four years – $500 million for research and development and $4.5 billion for a new plant and equipment. “It was roughly as though General Motors had decided to scrap its existing makes and models and offer in their place one new line of cars, covering the entire spectrum of demand, with a radically redesigned engine and exotic fuel,” wrote Fortune magazine in September 1966. It was the largest private venture to date. IBM emerged from the ordeal with five new factories; 33 percent more employees (190,000 altogether); a components-manufacturing operation that was bigger than the entire semiconductor industry; and a truly international capacity for designing and making computers.

With six processors and forty peripheral devices, the System/360 was introduced on 7 April 1964. (The system was later expanded to nine processors and more than seventy peripherals.) It was the first family of computers, and it was an enormous success, attracting orders at the rate of almost 1,000 a month and reshaping the entire computer industry. Some customers, eager to get their hands on a 360, bought places on IBM’s lengthening waiting list from other firms, and a sizable plug-compatible industry arose, consisting of companies that made peripherals for the 360. Other firms, using a longer depreciation schedule than IBM, bought 360s from IBM and leased them to customers at lower rates. And several computer manufacturers, particularly RCA and General Electric, were sent into a spin and eventually withdrew from the business.

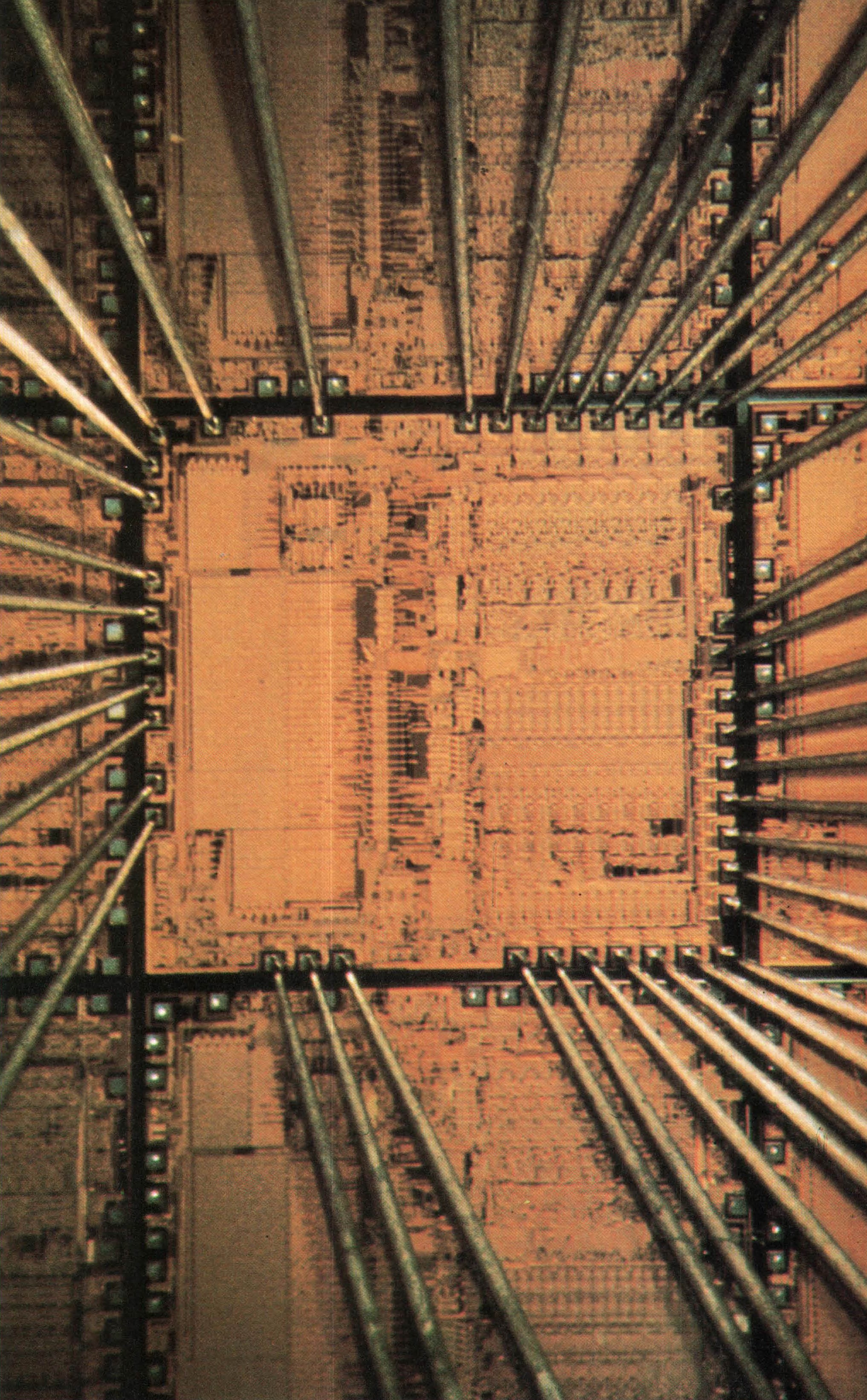

Meanwhile, IC computers started appearing in the mid-1960s. Burroughs incorporated chips into parts of two medium-sized computers introduced in 1966 (the B2500 and B3500); two years later, Control Data and NCR brought out computers composed entirely of ICs (the CDC 7600 and the Century Series, respectively). But it wasn’t until 1969 that ICs started showing up in IBM computers, and then only in the memories of the larger units of the 360 system. In the early 1970s, IBM replaced the 360 with the System/370, composed entirely of ICs. In general, the IC computers were hundreds of times more powerful and more reliable than their transistorized predecessors. They used less electricity, took up less space, and provided much more computational power for the purchase price.

At first, the IC’s impact on computers was limited. Although the number of transistors that semiconductor engineers managed to cram onto a chip doubled almost every year, the progression didn’t snowball until the early 1970s. The first 256-bit random-access memory, or RAM (a chip that serves as read/write memory, like a magnetic core), was introduced in 1968, and the first 1,024-bit, or 1K, RAM came along several months later. (The memory capacity of ICs is measured in powers of 2, 1K being 2¹⁰, or 1,024.) The 1K RAM was an important breakthrough. All of a sudden it was possible to replace a significant number of magnetic cores with a tiny IC, and magnetic-core memory, the mainstay of computers since the mid-1950s, started to disappear. And then, in 1971, a Silicon Valley engineer asked a pivotal question: Why not put a central processor on a chip? Why not indeed. The result was the microprocessor and, a few years later, the ultimate democratization of computer technology, the personal computer.
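The memory sizes mentioned above are powers of two, which is why "1K" means 1,024 bits rather than 1,000. The short Python check below is a minimal sketch of that arithmetic; the constant names are hypothetical labels chosen for illustration. It also shows that the 1K chip held four times as many bits as the 256-bit chip that preceded it.

```python
# Chip capacities from the text, expressed as powers of two
RAM_256_BITS = 2 ** 8    # the first 256-bit RAM (1968)
RAM_1K_BITS = 2 ** 10    # "1K" means 2**10 bits, not 1,000

print(RAM_256_BITS)                  # 256
print(RAM_1K_BITS)                   # 1024
print(RAM_1K_BITS // RAM_256_BITS)   # 4: the 1K RAM held four times as many bits
```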