In August 1949, the Soviet Union exploded an atom bomb, and the Cold War suddenly became a much deadlier affair. The international situation was already tense: the Berlin blockade had ended only three months earlier; most of Eastern Europe was in Russian hands; China was about to fall to the communists; guerrilla wars were raging in Greece and Turkey; and North Korea was making ominous threats against the southern half of the country. When, in September, the Truman administration broke the news about the Russian bomb, the disclosure provoked a wave of fear and confusion – a reaction that intensified with the equally frightful revelation that the Soviets had developed long-range bombers capable of crossing the North Pole and attacking the United States.

At that time, America’s air defense system was a patchwork of radar stations and control centers on the East and West coasts. Left over from World War II, it was utterly inadequate to the Russian threat. When the net detected a suspicious aircraft, for example, the nearest Air Force base was notified and a fighter was dispatched on an interception course plotted by an air traffic controller or a navigator. As long as it wasn’t confronted by many attackers, and as long as the intruders weren’t flying very fast or very low, the net was satisfactory. But the polar approaches over Canada and most of the United States lay outside the surveillance system, and the country would be helpless against a massive attack, particularly by bombers sneaking in under the radar. At low altitude, ground-based radar has a very short range, and the only way (in the late 1940s) to guard against low-flying aircraft was to set up a thick battery of radar stations, which only made the job of coordinating a defense much more difficult. Planes passing from one air sector to another could easily get lost in the shuffle.

Jolted by the Soviet threat, the U.S. Air Force appointed a civilian committee – the Air Defense System Engineering Committee – to study the country’s air defenses and to recommend a more effective system. In its final report, issued in October 1950, the committee called for, first, the immediate upgrading of the existing defense batteries and, second, the development of a comprehensive computerized defense network for North America. The project promised to consume billions of dollars, but the committee’s recommendations reflected a national consensus to protect the United States at any cost. So, in December, the Air Force asked MIT – the builder of Whirlwind, the world’s only real-time computer – to establish a research center to design the net and supervise its construction. MIT was promised all the money it needed.

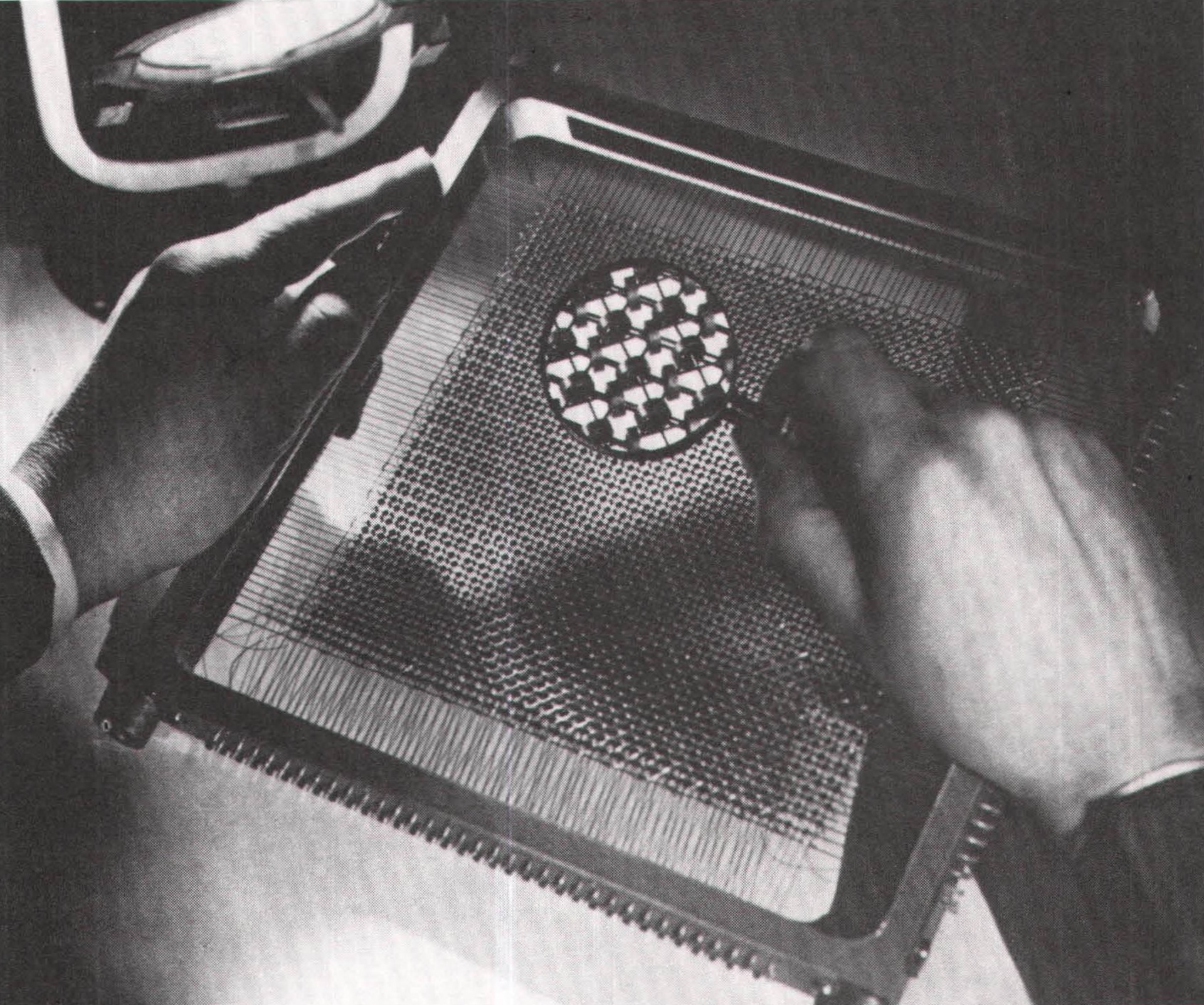

Work on the system, which came to be known as SAGE, for Semi-Automatic Ground Environment, proceeded along several fronts. Even before the committee had released its report, Forrester’s Digital Computer Laboratory was taken over by the Air Force (much to the delight of ONR, which had grown tired of footing the bills) and Whirlwind became the prototype of the SAGE computer. Forrester and his crew had to invent an entire technology. Computer monitors – television-like screens – were developed to display the aircraft being tracked and to give operators a means of communicating directly with Whirlwind. And programs were written to enable Whirlwind to keep track of aircraft and to compute interception courses automatically.

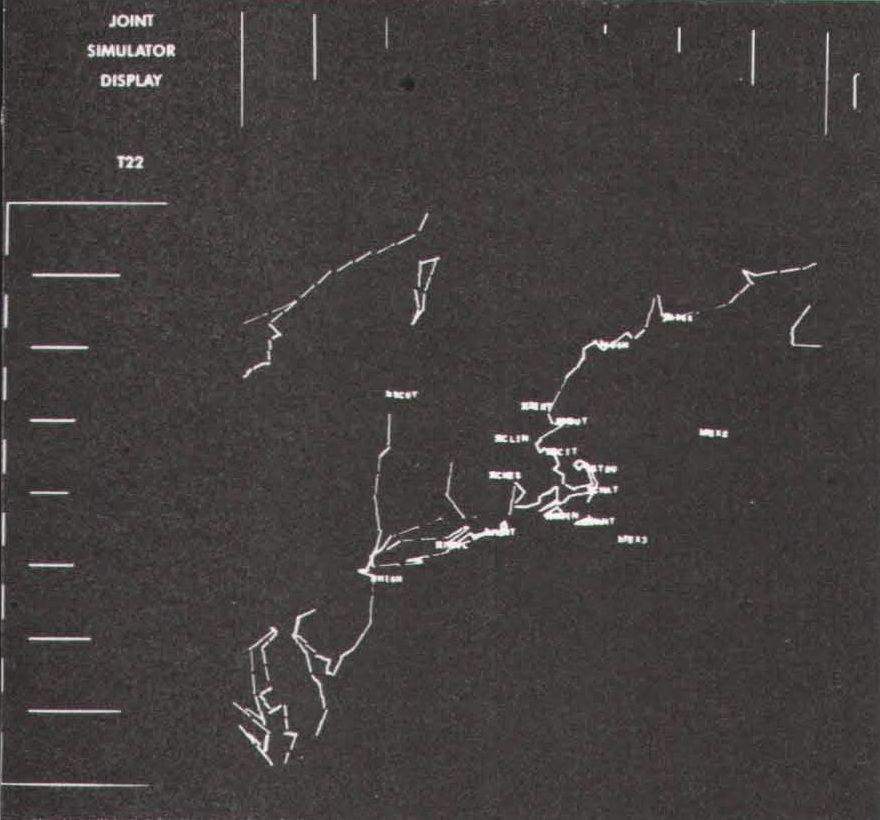

On 20 April 1951, Whirlwind was put to the test. Two prop-driven planes took off into the sky above Massachusetts, one plane acting as the target, the other as the interceptor. The target plane was picked up by an early-warning radar and displayed on Whirlwind’s monitor in the form of a bright spot of light, designated “T,” for target. Meanwhile, the interceptor, forty miles away, was shown on the screen by a spot labeled “F,” for fighter. At the direction of the air traffic controller, Whirlwind automatically computed an interception course, and the controller steered the fighter pilot to the target by radio. Whenever the target changed course, the controller radioed new directions to the pursuing fighter. Three interceptions were tried that day, and Whirlwind brought the fighter to within 1,000 yards of the target every time. In a much more challenging test two years later, Whirlwind managed to keep tabs on forty-eight aircraft.
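The passage does not say how Whirlwind’s program actually computed an interception course. As a rough illustration only, here is a minimal Python sketch of the textbook “collision course” calculation, assuming a flat two-dimensional map, a target holding a straight course at constant speed, and a fighter flying at a fixed speed. The function name `intercept`, the coordinate conventions, and the units are all hypothetical. The sketch finds the earliest time t at which a fighter flying in a straight line can reach the point the target will then occupy, by solving |P + Vt| = st for the target’s relative position P, its velocity V, and the fighter’s speed s.

```python
import math


def intercept(target_pos, target_vel, fighter_pos, fighter_speed):
    """Return (time, heading_deg, aim_point) for a straight-line intercept, or None.

    Hypothetical conventions: positions are (x, y) in miles, velocities in
    miles per minute, heading in degrees clockwise from north (the +y axis).
    This is an illustrative collision-course calculation, not Whirlwind's program.
    """
    # Target position relative to the fighter.
    px = target_pos[0] - fighter_pos[0]
    py = target_pos[1] - fighter_pos[1]
    vx, vy = target_vel

    # |P + V t| = s t  expands to  (V.V - s^2) t^2 + 2 (P.V) t + P.P = 0.
    a = vx * vx + vy * vy - fighter_speed ** 2
    b = 2 * (px * vx + py * vy)
    c = px * px + py * py

    if abs(a) < 1e-12:
        # Fighter and target equally fast: the equation becomes linear in t.
        if b >= 0:
            return None  # target is not closing; no intercept possible
        t = -c / b
    else:
        disc = b * b - 4 * a * c
        if disc < 0:
            return None  # fighter can never catch the target
        root = math.sqrt(disc)
        roots = ((-b - root) / (2 * a), (-b + root) / (2 * a))
        future = [t for t in roots if t > 0]
        if not future:
            return None
        t = min(future)  # earliest possible intercept

    # Where the target will be at time t, and the heading to fly there.
    aim_x = target_pos[0] + vx * t
    aim_y = target_pos[1] + vy * t
    heading = math.degrees(
        math.atan2(aim_x - fighter_pos[0], aim_y - fighter_pos[1])
    ) % 360
    return t, heading, (aim_x, aim_y)
```

For example, with the fighter at the origin flying at 10 miles a minute and the target forty miles due north heading east at 6 miles a minute, `intercept((0, 40), (6, 0), (0, 0), 10)` gives an intercept after 5 minutes at (30, 40), on a heading of about 36.9 degrees. Because the sketch assumes the target flies straight, it would have to be rerun with fresh radar positions whenever the target turned, much as the controller radioed new directions to the pursuing fighter.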

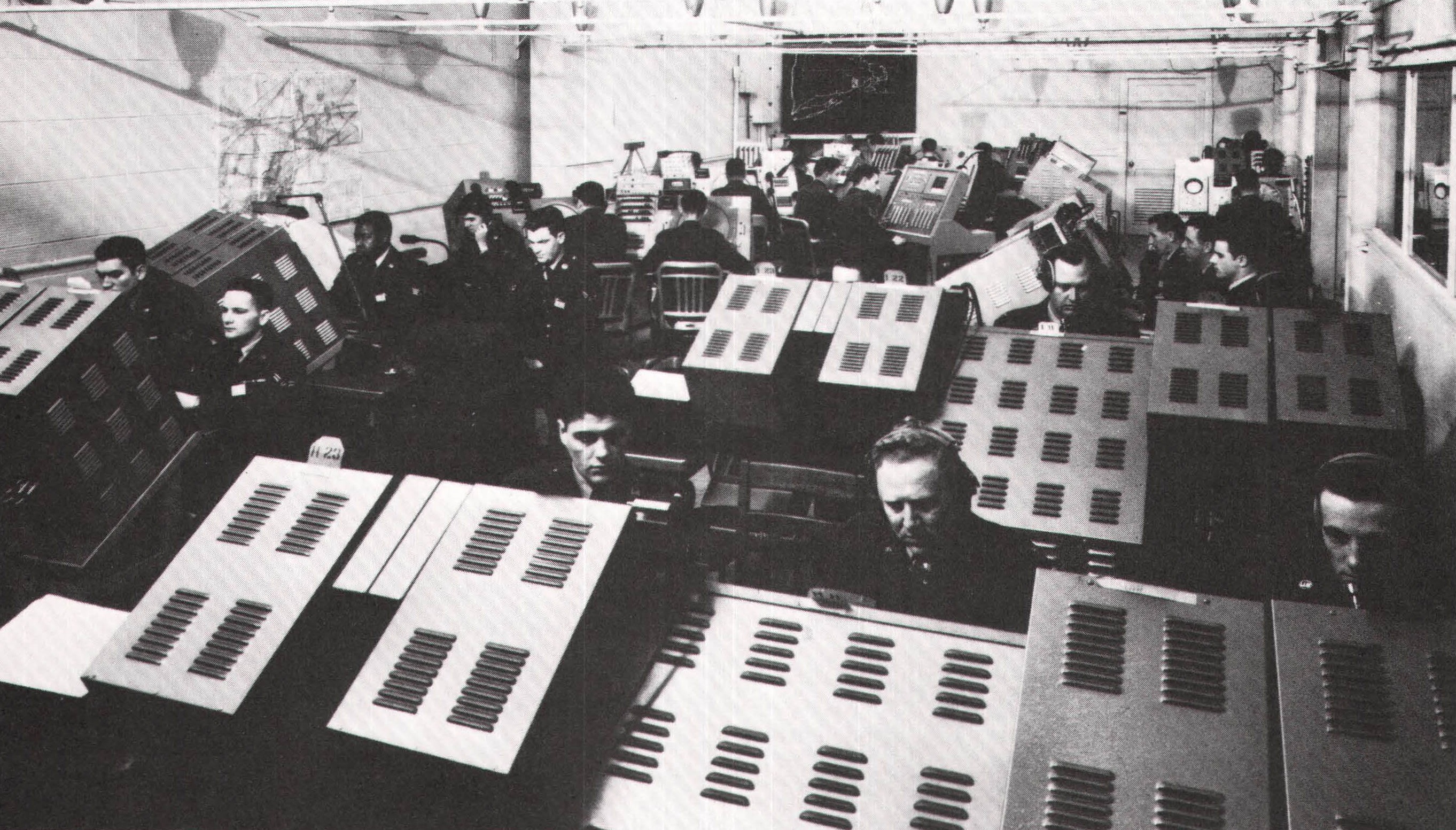

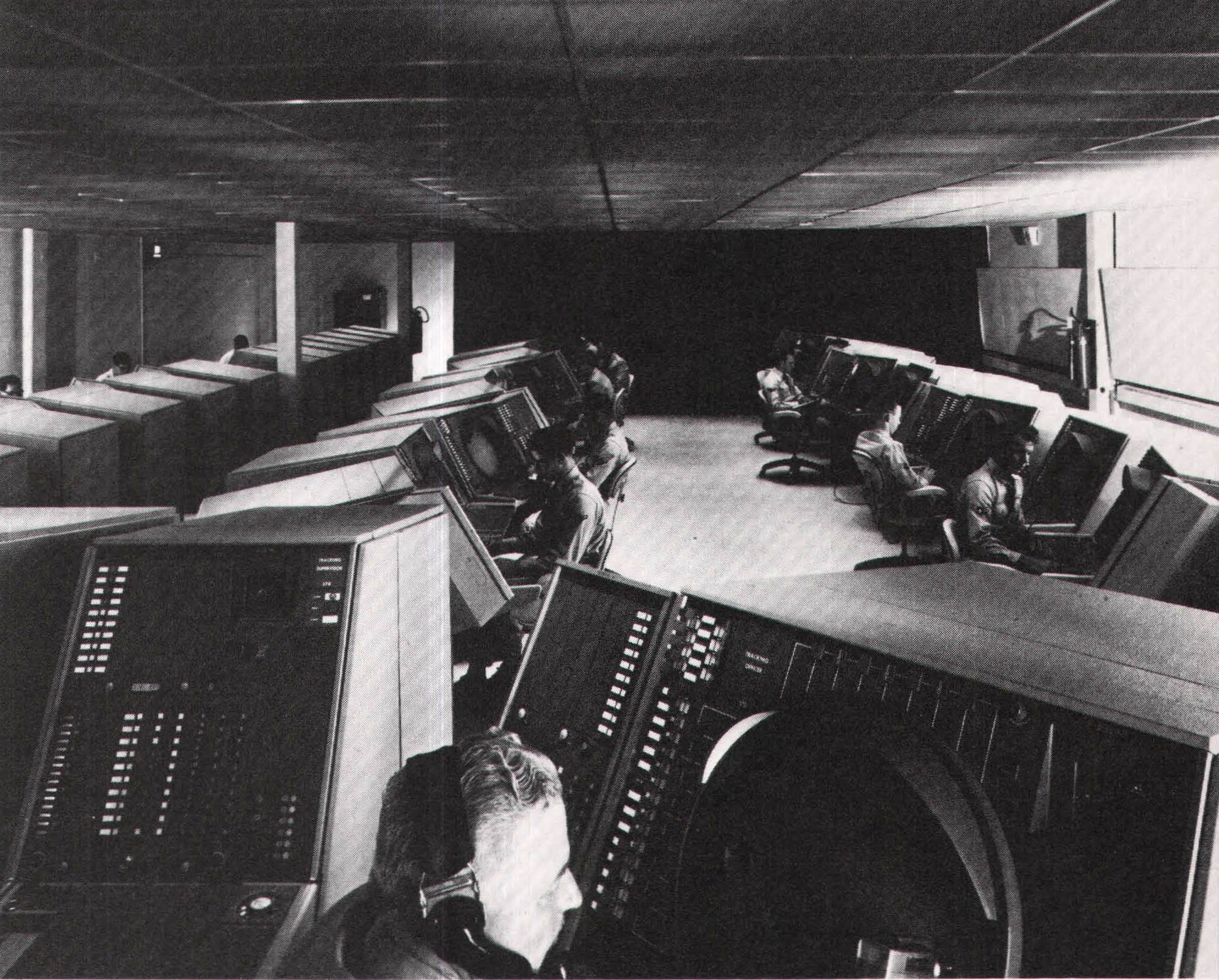

Meanwhile, at Lincoln Laboratory, the large research center MIT had established in Lexington, Massachusetts, to supervise the development of SAGE, scientists and military planners were trying to work out a general defense plan. In the end, they decided to divide the United States and Canada into twenty-three air sectors. All but one of the sectors were in the United States, with the twenty-third centered at North Bay, Ontario, guarding the northern approaches to the continent. Each sector would have its own direction center, a bomb-proof shelter where Air Force officers, using a real-time computer like Whirlwind, would monitor the skies and, if necessary, fight off an attack. There would also be three supreme combat centers, where the nation’s overall defense would be coordinated. (Another center was installed at Lincoln Lab for ongoing research and development.)

Forrester became the chief engineer of the SAGE computers – he had come a long way since the days of the Navy’s trainer-analyzer. An advanced version of Whirlwind – Whirlwind II – was built, and the SAGE computer moved a step closer to production. By the end of 1952, Lincoln Lab had started searching for a prime contractor to build the computers, and, not surprisingly, picked IBM. As the biggest data-processing firm in the country, IBM had the resources, the engineers, and the management to take on an enormous project like SAGE. And IBM gained much more than money from the contract; it received a front-row seat on the latest and most important developments in computer technology. Magnetic-core memories appeared in commercial IBM computers (the 704, in 1955) before showing up in other companies’ products, and IBM became the leading industrial expert in real-time applications.

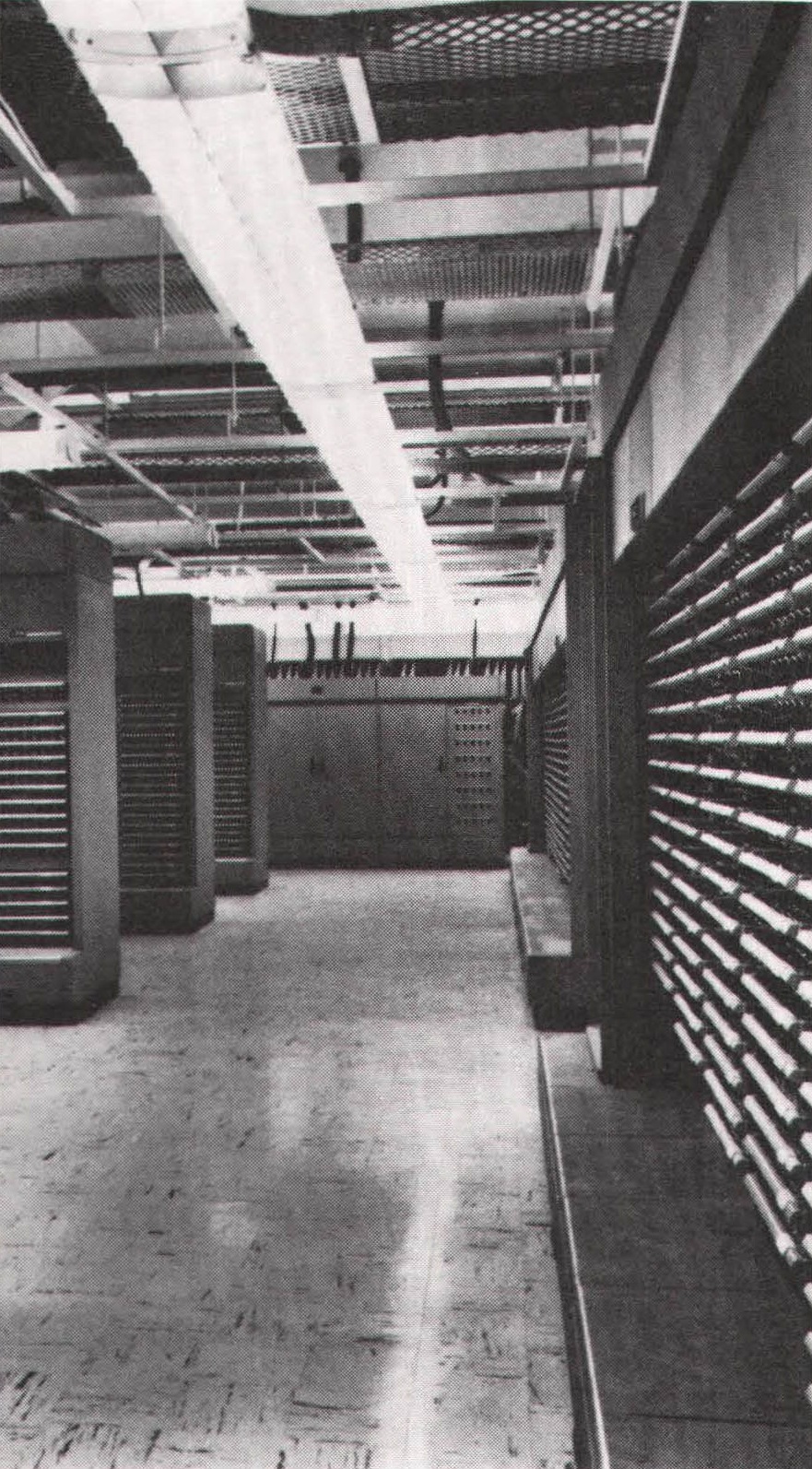

In 1955, Whirlwind II was superseded by another prototype of SAGE, designed by MIT’s Digital Computer Lab and IBM and built at IBM’s Poughkeepsie, New York, factory. However reliable the computer was, it was obviously not infallible, yet any operational failure of a SAGE computer, whatever the reason, was unacceptable. The machines had to run twenty-four hours a day, every day of the year, and a breakdown during an attack might lead to catastrophe. Rethinking its original plan, which called for a single computer at each direction center, Lincoln Lab decided that the SAGE computer should be duplexed. In other words, each SAGE computer would contain two central processors, each with its own control unit and read/write memory, but the pair would share the same input, output, and bulk storage facilities. Thus, if one processor broke down, the other would take over immediately.
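The duplex idea described above, two processors behind one shared set of input and output facilities with one active and one standing by, can be illustrated with a small Python model. This is a minimal sketch under loud assumptions: the class names `Processor` and `DuplexSystem`, the `healthy` flag, and the use of an exception to signal failure are all hypothetical, and the sketch does not attempt to model how the SAGE hardware actually detected failures or switched over.

```python
from dataclasses import dataclass, field


@dataclass
class Processor:
    """One half of a hypothetical duplex pair."""
    name: str
    healthy: bool = True

    def process(self, report: str) -> str:
        # A failed unit cannot do any work; signal the failure to the caller.
        if not self.healthy:
            raise RuntimeError(f"{self.name} has failed")
        return f"{self.name} handled {report}"


@dataclass
class DuplexSystem:
    """Two processors sharing one input/output path; only one is active."""
    active: Processor
    standby: Processor
    log: list = field(default_factory=list)  # stands in for the shared output

    def switch_over(self) -> None:
        # Swap roles: the standby unit takes over, the failed one goes off line.
        self.active, self.standby = self.standby, self.active

    def handle(self, report: str) -> str:
        try:
            result = self.active.process(report)
        except RuntimeError:
            # The active unit broke down: switch to the spare and retry.
            # If the spare has also failed, the error propagates.
            self.switch_over()
            result = self.active.process(report)
        self.log.append(result)
        return result
```

In use, processor A handles traffic until it is marked as failed, after which the same report stream is handled by B without the caller doing anything differently. The same active/standby arrangement reappears later in SABRE’s duplex computer, with one machine in constant use and the other standing by.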

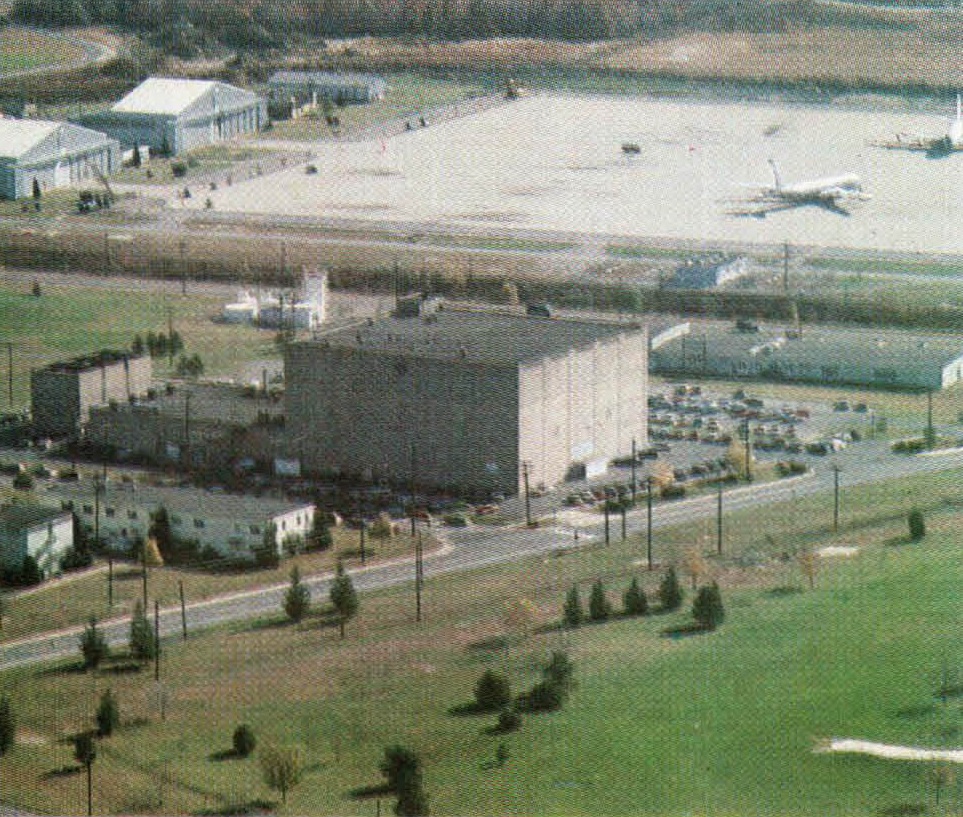

In July 1958, the first SAGE center, a grim, windowless, four-story concrete blockhouse, went into operation at McGuire Air Force Base in New Jersey. Twenty-six other centers were built over the next five years. Each center contained communications equipment, air conditioners, electrical generators, sleeping quarters, and a SAGE computer. Consisting of 55,000 tubes (more than any other computer, before or since) and weighing 250 tons, a SAGE computer could run fifty monitors, or workstations; track as many as four hundred airplanes; store one million bits of data in internal and external memory (magnetic cores and magnetic drums); and communicate with up to one hundred radars, observation stations, and other sources of information, combining the data to produce a single, integrated picture of whatever was going on in the sky.

The SAGE computers were remarkably reliable, out of order a mere 3.77 hours a year, or 0.043 percent of the time. In large part, SAGE’s reliability was the result of two things: a clever tube-checking technique, developed for Whirlwind, that detected weak tubes before they gave out, and a fault-tolerant system that, by rotating signals to redundant components, enabled the computer to work properly even when certain parts failed. Since SAGE never had to deal with a real attack, we don’t know how foolproof the system actually was. (For example, an atomic blast in the atmosphere above a SAGE center probably would have damaged the computer’s circuits and communications lines.)
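The downtime percentage can be checked with one line of arithmetic, assuming a 365-day year of continuous operation:

$$
\frac{3.77\ \text{h}}{365 \times 24\ \text{h}} = \frac{3.77}{8760} \approx 0.00043 = 0.043\%
$$

which corresponds to an availability of roughly 99.957 percent.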

SAGE was under almost constant development and refinement. As new weapons, such as ground-to-air missiles and surveillance satellites, appeared, they were incorporated into the system. And when advances in electronic technology led to the invention of the miniaturized solid-state components called integrated circuits, or ICs (also known as microchips), the SAGE computers were dismantled one by one. By January 1984, twenty-six years after the construction of the first SAGE center and an eon in the short history of computers, the last installation closed down. But most of SAGE’s huge blockhouses are still standing, relics of the Cold War. “The buildings of the direction centers were formidable to look at,” recalled Norman Taylor, a SAGE engineer:

Bob Everett and I used to drive to Poughkeepsie and back almost every week for about three years… One night we were coming home at about two o’clock in the morning and we were talking about what is important, what will be important, and where do we go from here. Bob said, “You know, Norm, you and I will be buried in some cemetery, and some guy will walk by those buildings and he’ll say, ‘What the hell do you suppose those guys had in mind?’”

Aside from ENIAC and von Neumann’s IAS machine, which established the paradigm of the stored-program computer, Whirlwind was the source of more significant technological innovations than any other computer. As the first real-time computer, Whirlwind led the way to such computational applications as air traffic control, real-time simulations, industrial process control (in refining and manufacturing, for example), inventory control, ticket reservation systems, and bank accounting systems. It gave birth to multiprocessing, or the simultaneous processing of several predetermined programs by different units of the machine, and computer networks, or the linking of several computers and other devices into a single system. It was the first computer with magnetic-core memories and interactive monitors. And it was the first sixteen-bit computer, which paved the way for the development of the minicomputer in the mid-1960s (as we shall see in the next chapter).

Almost all of these innovations were incorporated in SAGE (which was, however, a thirty-two-bit computer), along with some new ones, such as back-up computers and fault-tolerant systems. But SAGE’s technological significance doesn’t derive from any particular technical innovation or set of innovations. Rather, it stems from a lesson. Above all, SAGE taught the American computer industry how to design and build large, interconnected, real-time data-processing systems. Through SAGE, Whirlwind’s fabulous technology was transferred to the world at large, and computer systems as we know them today came into existence.

In 1954, while SAGE was under construction, IBM began work on a real-time computer seat-reservation system for American Airlines. A much smaller version of SAGE, it was known as SABRE, or Semi-Automatic Business-Related Environment. It consisted of a duplex computer – one in constant use, the other standing by in case of trouble – and 1,200 teletypes all over the country, linked via the phone lines to the airline’s computing center north of New York City. The system took ten years and $300 million to develop, and wasn’t in full operation until late in 1964. At that time, it was the largest commercial real-time data-processing network in the world. By the end of the decade, real-time computer systems were commonplace in industry, academia, and government.